In this guide, learn everything you need to know about cross-browser testing, including examples, a comparison of different implementation options and how you can get started with cross-browser testing today.

Table of contents

- What is Cross Browser Testing?

- Why is Cross Browser Testing Important?

- How to Implement Cross Browser Testing

- Cross Browser Testing Techniques

- Modern, AI-Based Cross Browser Testing Solution: Applitools Ultrafast Test Cloud

- Cross Browser Testing Tools and Applitools Visual AI

- Pro and Cons of Each Technique (Table of Comparison)

- My Learning from this Experience

- Summary

What is Cross Browser Testing?

Cross Browser Testing is a testing method for validating that the application under test works as expected across different browsers, viewport sizes, and devices. It can be done manually or as part of a test automation strategy. The tooling required for this activity can be built in-house or provided by external vendors.

Why is Cross Browser Testing Important?

When I began in QA I didn’t understand why cross-browser testing was important. But it quickly became clear to me that applications frequently render differently at different viewport sizes and with different browser types. This can be a complex issue to test effectively, as the number of combinations required to achieve full coverage can become very large.
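To make the scale concrete, here is a minimal sketch that counts the size of a full test matrix. The browser, viewport and platform lists are hypothetical values chosen for illustration, and `MatrixSize` / `combinations` are names invented for this example.

```java
import java.util.List;

/** Counts how many combinations a full cross-browser test matrix would contain. */
final class MatrixSize {

    /** Full coverage is the Cartesian product of all dimensions, so the sizes multiply. */
    static long combinations(List<?>... dimensions) {
        long total = 1;
        for (List<?> dimension : dimensions) {
            total *= dimension.size();
        }
        return total;
    }

    public static void main(String[] args) {
        // Hypothetical values, not taken from real usage data.
        List<String> browsers = List.of("Chrome", "Firefox", "Safari", "Edge");
        List<String> viewports = List.of("1920x1080", "1366x768", "768x1024", "375x812");
        List<String> platforms = List.of("Windows", "macOS", "Android", "iOS");

        System.out.println(combinations(browsers, viewports, platforms)); // 4 * 4 * 4 = 64
    }
}
```

The result is an upper bound, since some combinations do not exist in practice (Safari on Windows, for example). Adding one more dimension, such as three versions per browser, would triple the count again.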

A Cross Browser Testing Example

Here’s an example of what you might look for when performing cross-browser testing. Let’s say we’re working on an insurance application. I, as a user, should be able to view my insurance policy details on the website, using any browser on my laptop or desktop.

This should be possible while ensuring:

- The features remain the same

- The look and feel, UI or cosmetic effects are the same

- Security standards are maintained

How to Implement Cross Browser Testing

There are various aspects to consider while implementing your cross-browser testing strategy.

Understand the scope == Data!

“Different devices and browsers: Chrome, Safari, Firefox, Edge”

Thankfully IE is not in the list anymore (for most)!

You should first figure out the important combinations of devices, browsers and viewport sizes your user base accesses your application from.

PS: Each team member should have access to the product's analytics data to understand its usage patterns. This data, which includes OS and browser details (type, version, viewport sizes), is essential to plan and test proactively, instead of reacting later to situations (= defects).

The analytics data will tell you the different browser types, browser versions, devices and viewport sizes you need to consider in your testing and test automation strategy.
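One possible way to turn analytics data into a test scope is to keep adding the most-used configurations until they cover a target share of sessions. The sketch below assumes the analytics export can be reduced to a map from a configuration label to a session count. The class name, labels and numbers are all hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Picks the configurations worth testing first, based on usage data. */
final class CoveragePlanner {

    /**
     * Returns the most-used configurations, in descending order of sessions,
     * until their combined share of all sessions reaches targetShare (0.0 to 1.0).
     */
    static List<String> pick(Map<String, Long> sessionsByConfig, double targetShare) {
        long total = sessionsByConfig.values().stream().mapToLong(Long::longValue).sum();

        List<Map.Entry<String, Long>> sorted = new ArrayList<>(sessionsByConfig.entrySet());
        sorted.sort(Map.Entry.<String, Long>comparingByValue().reversed());

        List<String> picked = new ArrayList<>();
        long covered = 0;
        for (Map.Entry<String, Long> entry : sorted) {
            // Stop as soon as the configurations picked so far reach the target share.
            if (total == 0 || (double) covered / total >= targetShare) {
                break;
            }
            picked.add(entry.getKey());
            covered += entry.getValue();
        }
        return picked;
    }

    public static void main(String[] args) {
        // Hypothetical session counts per browser / viewport combination.
        Map<String, Long> sessions = Map.of(
                "Chrome / 1920x1080", 5000L,
                "Safari / 375x812", 3000L,
                "Firefox / 1366x768", 1200L,
                "Edge / 1920x1080", 800L);

        // Prints [Chrome / 1920x1080, Safari / 375x812, Firefox / 1366x768]
        System.out.println(pick(sessions, 0.90));
    }
}
```

With these made-up numbers, three configurations cover 92% of sessions, so the fourth could be tested less often. The 90% threshold is only an example; the right cut-off depends on your product and risk appetite.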

Cross Browser Testing Techniques

There are various ways you can perform cross-browser testing. Let’s understand them.

Local Setup -> On a Single (Dev / QA) Machine

We usually have multiple browsers on our laptops / desktops. While there are other ways to get started, it is probably simplest to start implementing your cross browser tests here. You also need a local setup to enable debugging and maintaining / updating the tests.

If mobile-web is part of the strategy, then you also need to have the relevant setup available on local machines to enable that.

Setting up the Infrastructure

While this may seem the easiest, it can get out of control very quickly.

Examples:

- You may not be able to install all supported browsers on your computer (ex: Safari is not supported on Windows OS).

- Browser vendors keep releasing new versions very frequently. You need to keep your browser drivers in sync with this.

- Maintaining / using older versions of the browsers may not be very straightforward.

- If you need to run tests on mobile devices, you may not have access to all the variety of devices. So setting up local emulators may be a way to proceed.

The choices can actually vary based on the requirements of the project and on a case by case basis.

As alternatives, we can either build an in-house testing solution or use a third-party platform or licensed tool to meet our device farm needs.

In-House Setup of Central Infrastructure

You can set up a central infrastructure of browsers and emulators or real devices in your organization that can be leveraged by the teams. You will also need some software to manage the usage and allocation of these browsers and devices.

This infrastructure can potentially be used in the following ways:

- Triggered from a local machine: tests can be triggered from any dev / QA machine to run on the central infrastructure.

- For CI execution: tests triggered via Continuous Integration (CI) tools such as Jenkins, CircleCI, Azure DevOps, TeamCity, etc. can be run against browsers / emulators set up on the central infrastructure.
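Because the same tests may run from a local machine or from CI, it helps to keep the execution target out of the test code. Here is a minimal sketch, assuming the central infrastructure exposes a single endpoint URL and that it is supplied through an environment variable. The variable name `GRID_URL` and the class `ExecutionTarget` are hypothetical.

```java
import java.net.URI;
import java.util.Map;
import java.util.Optional;

/** Decides whether tests should run on local browsers or on the central infrastructure. */
final class ExecutionTarget {

    /**
     * Returns the central infrastructure endpoint if one is configured,
     * otherwise an empty Optional, meaning "use the browsers on this machine".
     */
    static Optional<URI> gridEndpoint(Map<String, String> env) {
        String url = env.get("GRID_URL"); // hypothetical variable name
        if (url == null || url.isBlank()) {
            return Optional.empty();
        }
        return Optional.of(URI.create(url.trim()));
    }

    public static void main(String[] args) {
        Optional<URI> grid = gridEndpoint(System.getenv());
        System.out.println(grid
                .map(uri -> "Running against central infrastructure at " + uri)
                .orElse("Running against local browsers"));
    }
}
```

A CI job would set the variable, while a developer debugging locally would leave it unset. With Selenium, the resolved endpoint would typically be handed to a `RemoteWebDriver`.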

Cloud Solution

You can also opt to run the tests against browsers / devices in a cloud-based solution. Various providers in the market offer device / browser options that give you the coverage you require, without you having to build, maintain or manage the infrastructure yourself. This can also be used to run tests triggered from local machines, or from CI.

Modern, AI-Based Cross Browser Testing Solution: Applitools Ultrafast Test Cloud

It is important to understand the evolution of browsers in recent years.

- They have started conforming to the W3C standard.

- They seem to have started adopting Continuous Delivery – well, at least releasing new versions at a very fast pace, sometimes multiple versions a week.

- Many major browsers are now adopting and building on the Chromium codebase. This makes these browsers very similar, except for the rendering part, which is still fairly browser specific.

We need to factor this change into our cross browser testing strategy.

In addition, AI-based cross-browser testing solutions are becoming quite popular. They use machine learning to help scale your automation execution and give deep insights into the results from a functional, performance and user-experience perspective.

To get hands-on experience with this, I signed up for a free Applitools account, which uses a powerful Visual AI, and implemented a few tests using this tutorial as a reference.

How Does Applitools Visual AI Work as a Solution for Cross Browser Testing

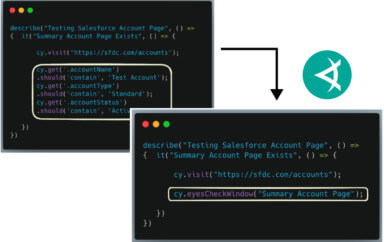

Integration with Applitools

Integrating Applitools with your functional automation is extremely easy. Simply select the relevant Applitools SDK based on your functional automation tech stack from here, and follow the detailed tutorial to get started.

Now, at any place in your test execution where you need functional or visual validation, add methods like eyes.checkWindow(), and you are set to run your test against any browser or device of your choice.

Reference: https://applitools.com/tutorials/overview/how-it-works.html

AI-Based Cross Browser Testing

Now that you have your tests ready and running against a specific browser or device, the next step is scaling them for cross-browser testing.

What if I told you that, just by adding the different browser and device combinations, you can use the same single script to get functional and visual test results on every combination specified, covering the cross browser testing aspect as well?

Seems too far-fetched?

It isn’t. That is exactly what Applitools Ultrafast Test Cloud does!

Adding the lines of code below will do the magic. You can also change the configurations as per your requirements.

(The example below is from the Selenium-Java SDK. A similar configuration can be supplied for the other SDKs.)

// Add browsers with different viewports

config.addBrowser(800, 600, BrowserType.Chrome);

config.addBrowser(700, 500, BrowserType.FIREFOX);

config.addBrowser(1600, 1200, BrowserType.IE_11);

config.addBrowser(1024, 768, BrowserType.EDGE_CHROMIUM);

config.addBrowser(800, 600, BrowserType.SAFARI);

// Add mobile emulation devices in Portrait mode

config.addDeviceEmulation(DeviceName.iPhone_X, ScreenOrientation.PORTRAIT;

config.addDeviceEmulation(DeviceName.Pixel_2, ScreenOrientation.PORTRAIT;

// Set the configuration object to eyes

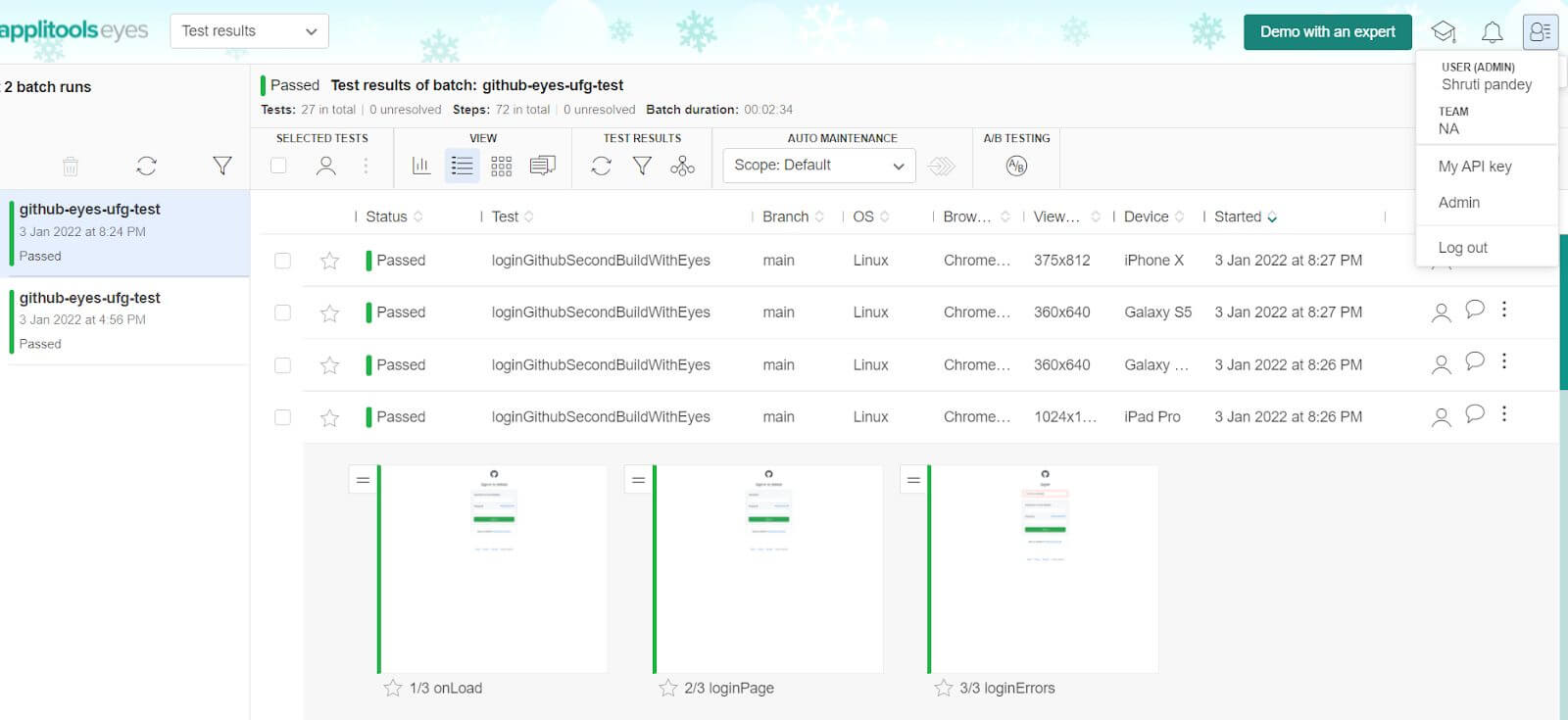

eyes.setConfiguration(config);Now when you run the test again, say against Chrome browser on your laptop, in the Applitools dashboard, you will see results for all the browser and device combinations provided above.

You may be wondering, the test ran just once on the Chrome browser. How did the results from all other browsers and devices come up? And so fast?

This is what Applitools Ultrafast Grid (a part of the Ultrafast Test Cloud) does under the hood:

- When the test starts, the browser configuration is passed from the test execution to the Ultrafast Grid.

- For every eyes.checkWindow call, the information captured (DOM, CSS, etc.) is sent to the Ultrafast Grid.

- The Ultrafast Grid will render the same page / screen on each browser / device provided by the test (think of this as playing a downloaded video in airplane mode).

- Once rendered in each browser / device, a visual comparison is done and the results are sent to the Applitools dashboard.
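The steps above follow a "capture once, render everywhere" pattern. The toy model below is only a sketch of that flow and is not Applitools code: the class, the string-based "rendering" and the exact-match comparison are all stand-ins invented for illustration, whereas the real service renders in actual browsers and compares the results visually.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BiFunction;

/** Toy model of "capture once, render everywhere"; a conceptual sketch only. */
final class RenderFanOut {

    /**
     * Renders one captured snapshot on every target and reports, per target,
     * whether the rendering equals that target's stored baseline.
     */
    static Map<String, Boolean> check(String snapshot,
                                      List<String> targets,
                                      BiFunction<String, String, String> renderer,
                                      Map<String, String> baselines) {
        Map<String, Boolean> results = new LinkedHashMap<>();
        for (String target : targets) {
            // The test itself ran once; only the rendering step repeats per target.
            String rendered = renderer.apply(snapshot, target);
            // A target without a baseline is reported as not matching.
            results.put(target, rendered.equals(baselines.get(target)));
        }
        return results;
    }
}
```

The point of the model is that the number of test executions stays at one no matter how many targets are listed. Only the rendering and comparison work grows with the list, and that work happens away from the machine running the test.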

What I like about this AI-based solution is that:

- I create my automation scripts for different purposes (functional, visual, cross browser testing) in one go

- There is no need to maintain devices

- There is no need to create different set-ups for different types of testing

- The AI algorithms start providing results from the first run – “no training required”

- I can leverage the solution on any kind of setup, i.e. running the scripts through my IDE, terminal, or CI/CD

- I can leverage the solution for web, mobile web, and native apps

- I can integrate visual testing results as part of my CI execution

- Rich information available in the dashboard including ease of updating the baselines, doing Root Cause Analysis, reporting defects in Jira or Rally, etc.

- I can ensure there are no Contrast issues (part of Accessibility testing) in my execution at scale

Here is the screenshot of the Applitools dashboard after I ran my sample tests:

Cross Browser Testing Tools and Applitools Visual AI

The Ultrafast Grid and Applitools Visual AI can be integrated into many popular free and open source test automation frameworks to easily supercharge their effectiveness as cross-browser testing tools.

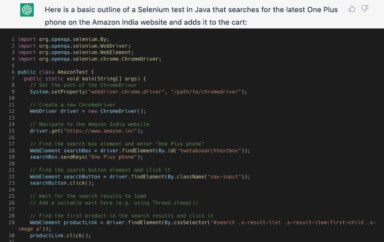

Cross Browser Testing in Selenium

As you saw above in my code sample, Ultrafast Grid is compatible with Selenium. Selenium is the most popular open source test automation framework. It is possible to perform cross browser testing with Selenium out of the box, but Ultrafast Grid offers some significant advantages. Check out this article for a full comparison of using an in-house Selenium Grid vs using Applitools.

Cross Browser Testing in Cypress

Cypress is another very popular open source test automation framework. However, it can only natively run tests against a few browsers at the moment – Chrome, Edge and Firefox. The Applitools Ultrafast Grid allows you to expand this list to include all browsers. See this post on how to perform cross-browser tests with Cypress on all browsers.

Cross Browser Testing in Playwright

Playwright is an open source test automation framework that is newer than both Cypress and Selenium, but it is growing quickly in popularity. Playwright has some limitations on doing cross-browser testing natively, because it tests “browser projects” and not full browsers. The Ultrafast Grid overcomes this limitation. You can read more about how to run cross-browser Playwright tests against any browser.

Pro and Cons of Each Technique (Table of Comparison)

| | Local Setup | In-House Setup | Cloud Solution | AI-Based Solution (Applitools) |
|---|---|---|---|---|
| Infrastructure | Pros: Fast feedback on local machine. Cons: Needs to be repeated for each machine where the tests need to execute; not all configurations can be set up locally. | Pros: No inbound / outbound connectivity required. Cons: Needs considerable effort to set up, maintain and update the infrastructure on a continued basis. | Pros: No effort required to build / maintain / update the infrastructure. Cons: Needs inbound and outbound connectivity from the internal network; latency issues may be seen as requests go to cloud-based browsers / devices. | Pros: No effort required to set up. |
| Setup and Maintenance | To be taken care of by each team member from time to time, including OS / browser version updates. | To be taken care of by the internal team from time to time, including OS / browser version updates. | To be taken care of by the service provider. | To be taken care of by the service provider. |
| Speed of Feedback | Slowest, as all dependencies need to be taken care of, and the test needs to be repeated for each browser / device combination. | Depends on concurrent usage due to multiple test runs. | Depends on network latency; network issues may cause intermittent failures; depends on reliability and connectivity of the service provider. | Fast and seamless scaling. |
| Security | Best, as it is in-house, using internal firewalls, VPNs, network and data storage. | Best, as it is in-house, using internal firewalls, VPNs, network and data storage. | High risk: Needs inbound network access from the service provider to the internal test environments. Browsers / devices will have access to the data generated by running the test, so cleanup is essential. No control over who has access to the cloud service provider infrastructure, or whether they access your internal resources. | Low risk: There is no inbound connection to your internal infrastructure. Tests run on the internal network, so there is no data on the Applitools server (other than screenshots used for comparison with the baseline). |

My Learning from this Experience

- A good cross browser testing strategy allows you to reduce the risk of functionality and visual experience not working as expected on the browsers and devices used by your users. A good strategy will also optimize the testing efforts required to do this. To allow this, you need data to provide the insights from your users.

- Having a holistic view of how your team will be leveraging cross browser testing (ex: manual testing, automation, local executions, CI-based execution, etc.) is important to know before you start off with your implementation.

- Sometimes the easiest way may not be the best. For example, automating only against the browsers installed on your own computer will not scale. At the same time, using technology like Applitools Ultrafast Test Cloud is very easy: you end up writing less code and get increased functional and visual coverage at scale.

- You need to think about the ROI of your approach and whether it achieves your cross browser testing objectives. The ROI calculation should include:

  - Effort to implement, maintain, execute and scale the tests

  - Effort to set up and maintain the infrastructure (hardware and software components)

  - Ability to get deterministic and reliable feedback from test execution

Summary

Depending on your project strategy, scope, manual or automation requirements and of course, the hardware or infrastructure combinations, you should make a choice that not only suits the requirements but gives you the best returns and results.

Based on my past experiences, I am very excited about the Applitools Ultrafast Test Cloud, a unique way to scale test automation seamlessly. In the process, I ended up writing less code and got high test coverage with very high accuracy. I recommend that everyone try this and experience it for themselves!

Get Started Today

Want to get started with Applitools today? Sign up for a free account and check out our docs to get up and running today, or schedule a demo and we’ll be happy to answer any questions you may have.

Editor’s Note: This post was originally published in January 2022, and has been updated for accuracy and completeness.