If you read my previous blog post, “Fast Testing Across Multiple Browsers,” you know that participants in the Applitools Ultrafast Cross Browser Hackathon learned the following:

- Applitools Ultrafast Grid requires an application test to be run just once. Legacy approaches require repeating tests for each browser, operating system, and viewport size of interest.

- Cross browser tests and analysis typically complete within 10 minutes, so test times match the scale of application build times. Legacy approaches take several hours to generate test and analysis results.

- Applitools makes it possible to incorporate cross browser tests into the build process, with both speed and accuracy.

Today, we’re going to talk about another benefit of using Applitools Visual AI and Ultrafast Grid: test code stability.

What is Test Code Stability?

Test code stability is the property of test code continuing to give consistent and appropriate results over time. With stable test code, tests that pass continue to pass correctly, and tests that fail continue to fail correctly. Stable tests generate neither false positives (reporting a failure in error) nor false negatives (missing a real failure).
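The four possible outcomes of a single test run can be written down as a small lookup. This is an illustrative sketch, not part of any Applitools tooling: the `Outcome`, `classify`, and `is_stable` names are hypothetical, and it assumes you know, after the fact, whether the app really had a defect.

```python
from enum import Enum


class Outcome(Enum):
    TRUE_PASS = "test passes, app is correct"
    TRUE_FAIL = "test fails, app has a defect"
    FALSE_POSITIVE = "test fails, but app is correct"
    FALSE_NEGATIVE = "test passes, but app has a defect"


def classify(test_passed: bool, app_has_defect: bool) -> Outcome:
    """Map one test run onto the four outcomes described above."""
    if test_passed:
        return Outcome.FALSE_NEGATIVE if app_has_defect else Outcome.TRUE_PASS
    return Outcome.TRUE_FAIL if app_has_defect else Outcome.FALSE_POSITIVE


def is_stable(runs: list[tuple[bool, bool]]) -> bool:
    """Treat a test as stable over a run history if no run produced a false result."""
    return all(
        classify(passed, defect) in (Outcome.TRUE_PASS, Outcome.TRUE_FAIL)
        for passed, defect in runs
    )


# Each tuple is (test_passed, app_has_defect).
history = [(True, False), (False, True), (False, False)]
print(is_stable(history))  # False: the last run failed on a correct app
```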

Stable test code produces consistent results. Unstable test code requires maintenance to address the sources of instability. So, what causes test code instability?

Anand Bagmar wrote a great review of the sources of flaky tests. Key sources of instability include:

- Race conditions – you apply inputs too quickly to ensure a consistent output

- Ignoring settling time – you sample your output before it has become stable

- Network delay – your network infrastructure causes unexpected behavior

- Dynamic environments – your inputs cannot guarantee all the outputs

- Incompletely scoped test conditions – you have not specified the correct changes

- Myopia – you only look for expected changes and actual changes occur elsewhere

- Code changes – your code uses obsolete controls or measures obsolete output
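Race conditions and ignored settling time share a common mitigation: wait until the output stops changing before asserting on it. Below is a minimal polling sketch, assuming a `read` callable that returns the current observable output (in a UI test, perhaps the text of an element). The helper name and its default thresholds are hypothetical, not taken from any test framework.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until_stable(
    read: Callable[[], T],
    stable_samples: int = 3,
    interval_s: float = 0.1,
    timeout_s: float = 5.0,
) -> T:
    """Poll `read` until it returns the same value `stable_samples` times in a row.

    Raises TimeoutError if the output has not settled within `timeout_s` seconds.
    """
    deadline = time.monotonic() + timeout_s
    last = read()
    repeats = 1
    while repeats < stable_samples:
        if time.monotonic() > deadline:
            raise TimeoutError(f"output did not settle; last value was {last!r}")
        time.sleep(interval_s)
        current = read()
        if current == last:
            repeats += 1  # same value again: one step closer to "settled"
        else:
            last, repeats = current, 1  # value changed: restart the count


    return last


# A fake element whose text settles after a brief loading state.
readings = iter(["Loading...", "Loading...", "Total: $42", "Total: $42", "Total: $42"])
print(wait_until_stable(lambda: next(readings), interval_s=0.0))  # Total: $42
```

Requiring several identical samples, rather than one, avoids asserting on a transient value such as the second "Loading..." reading.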

When you develop tests for an evolving application, code changes introduce the most instability in your tests. UI tests, whether they test the UI alone or complete end-to-end behavior, depend on the underlying UI code. You use your knowledge of the app code to build the test interfaces. Locator changes, whether to coded identifiers or to CSS or XPath locators, can break your tests.

When test code depends on the app code, each app release requires test maintenance. Otherwise, no engineer can be sure that a “passing” test did not miss an actual failure, or that a “failing” test indicates a real failure rather than a locator change.

Test Code Stability and Cross Browser Testing

Given these sources of instability, cross browser tests pose a huge challenge for a tester like you. You need to ensure that your cross browser test infrastructure addresses these sources of instability so that your cross browser behavior matches expected results.

If you use a legacy approach to cross browser testing, you need to ensure that your physical infrastructure does not introduce network or other infrastructure sources of test flakiness. Part of your maintenance work is making sure that your test infrastructure does not become a source of false positives or false negatives.

Another check you make relates to responsive app design. How do you ensure responsive app behavior? How do you validate the page layout for each viewport size?

If you use legacy approaches, you spend a lot of time ensuring that your infrastructure, your tests, and your results all match expected app user behavior. In contrast, the Applitools approach does not require debugging and maintaining multiple test infrastructures, since the purpose of the test is to verify that the server response renders properly.

Finally, you have to account for the impact of every new app coding change on your tests. How do you update your locators? How do you ensure that your test results match your expected user behavior?

Improving Stability: Limiting Dependency on Code Changes

One thing we have observed over time: code changes drive test code maintenance. We demonstrated this dependency relationship in the Applitools Visual AI Rockstar Hackathon, and again in the Applitools Ultrafast Cross Browser Hackathon.

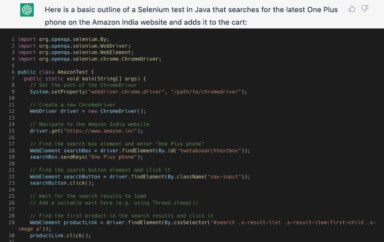

The legacy approach uses locators both to apply test conditions and to measure application behavior. Because locators can change from release to release, test authors must review and update their tests with each release.

Many teams have tried to address the locator dependency in test code.

Some test developers sit inside the development team. They create their tests as the application is developed, and they build the dependencies into the app development process. This approach can ensure that locators remain current. On the flip side, these tests provide little information on how the application behavior changes over time.

Some developers provide a known set of identifiers as part of the development process, so that the UI tests use a consistent set of identifiers. These tests risk myopic inspection. Because they depend on supplied identifiers, especially to measure application behavior, they can produce false negatives: the identifiers do not change, but they may no longer reflect the actual behavior of the application.

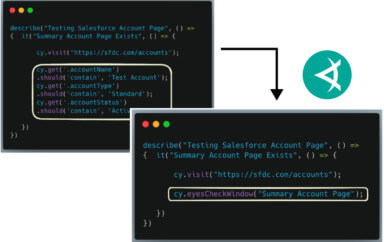

The modern approach limits identifier use to applying test conditions, while Applitools Visual AI measures the application response in the UI. This approach still depends on identifier consistency, but on far fewer identifiers. In both hackathons, participants cut their use of identifiers by 75% to 90%. Their code ran more consistently and required less maintenance.

Implications of Modern Cross Browser Testing

Applitools Ultrafast Grid overcomes many of the hurdles that testers experience running legacy cross browser test approaches. Beyond the pure speed gains, Applitools offers improved stability and reduced test maintenance.

Modern cross browser testing reduces dependency on locators. By using Visual AI instead of locators to measure application response, Applitools Ultrafast Grid can show when application behavior has changed, even if the locators remain the same. Conversely, Ultrafast Grid can show when the behavior remains stable even though locators have changed. By reducing dependency on locators, Applitools ensures a higher degree of stability in test results.
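Both cases can be illustrated with a toy model. This is not the Applitools API: a page here is just a list of elements, each with an id (the locator) and rendered text, and the hypothetical `rendered_check` stands in for Visual AI only in the crudest sense, comparing rendered text in order. Real visual comparison works on rendered pages, which this sketch does not attempt.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Element:
    element_id: str  # the locator a legacy test depends on
    text: str        # what the user actually sees


Page = list[Element]


def locator_check(page: Page, element_id: str, expected_text: str) -> bool:
    """Legacy-style assertion: find one element by id and compare its text."""
    matches = [e for e in page if e.element_id == element_id]
    return len(matches) == 1 and matches[0].text == expected_text


def rendered_check(page: Page, baseline: Page) -> bool:
    """Toy stand-in for a visual comparison: compare only what is rendered, in order."""
    return [e.text for e in page] == [e.text for e in baseline]


baseline = [Element("title", "Cart"), Element("total", "$42"), Element("promo", "Free shipping")]

# Case 1: a developer renames a locator; what the user sees is unchanged.
renamed = [Element("title", "Cart"), Element("order-total", "$42"), Element("promo", "Free shipping")]
print(locator_check(renamed, "total", "$42"))  # False: a false positive
print(rendered_check(renamed, baseline))       # True: behavior is unchanged

# Case 2: locators stay the same, but a change appears outside the checked element.
broken = [Element("title", "Cart"), Element("total", "$42"), Element("promo", "Free shiping")]
print(locator_check(broken, "total", "$42"))   # True: a false negative (myopia)
print(rendered_check(broken, baseline))        # False: the change is caught
```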

Applitools Ultrafast Grid also reduces infrastructure setup and maintenance for cross browser tests. In the legacy setup, each unique browser requires its own setup and connection to the server, and each setup can have physical or other failure modes that must be identified and isolated independently of the application behavior. By capturing the response from the server once and validating the DOM across the other target browsers, operating systems, and viewport sizes, Applitools reduces infrastructure debugging and maintenance effort.

Conclusions

Hackathon participants gave us consistent feedback on cross browser testing. They viewed legacy cross browser tests as:

- Likely to break on an app update

- Susceptible to infrastructure problems

- Expensive to maintain over time

In contrast, they saw Applitools Ultrafast Grid as:

- Less expensive to maintain

- More likely to expose rendering errors

- Likely to provide more consistent results

You can read the entire report here.

What’s Next

What holds companies back from cross browser testing? Bad experiences getting results. But what if they could get good test results and have a good experience at the same time? Next, we share what participants told us about their experience in the Applitools Cross Browser Hackathon.