In June, Applitools invited any and all to its “Ultrafast Grid Hackathon”. Participants tried out the Applitools Ultrafast Grid for cross-browser testing on a number of hands-on, real-world testing tasks.

In more than six years as a software tester, I have spent the majority of my time on web projects. On these projects, cross-browser compatibility is always a requirement. Since we cannot control how our customers access websites, we have to do our best to validate their potential experience. We need to validate functionality, layout, and design across operating systems, browser engines, devices, and screen sizes.

Applitools offers an easy, fast and intelligent approach to cross-browser testing that requires no extra infrastructure for running client tests.

Getting Started

We needed to demonstrate proficiency with two different approaches to the task:

- What Applitools referred to as modern tests (using their Ultrafast Grid)

- Traditional tests, where we would set up a test framework from scratch, using our preferred tools.

In total there were 3 tasks that needed to be automated, on different breakpoints, in all major desktop browsers, and on Mobile Safari:

- validating a product listing page’s responsiveness and layout,

- using the product listing page’s filters and validating that they work correctly,

- validating the responsiveness and layout of a product details page

These tasks would be executed against a V1 of the website (considered “bug-free”) and would then be used as a regression pack against a V2 / rewrite of the website.

Setting up Cypress for the Ultrafast Grid

I chose Cypress as I wanted a tool where I could quickly iterate, get human-readable errors and feel comfortable. The required desktop browsers (Chrome, Firefox and Edge Chromium) are all compatible. The system under test was on a single domain, which meant I would not be disadvantaged by choosing Cypress. None of Cypress’ more advanced features (e.g. stubbing or intercepting network responses) were needed.

The modern cross-browser tests were extremely easy to set up. The only steps required were two npm package installs (Cypress and the Applitools SDK) and running `npx eyes-setup` to import the SDK.

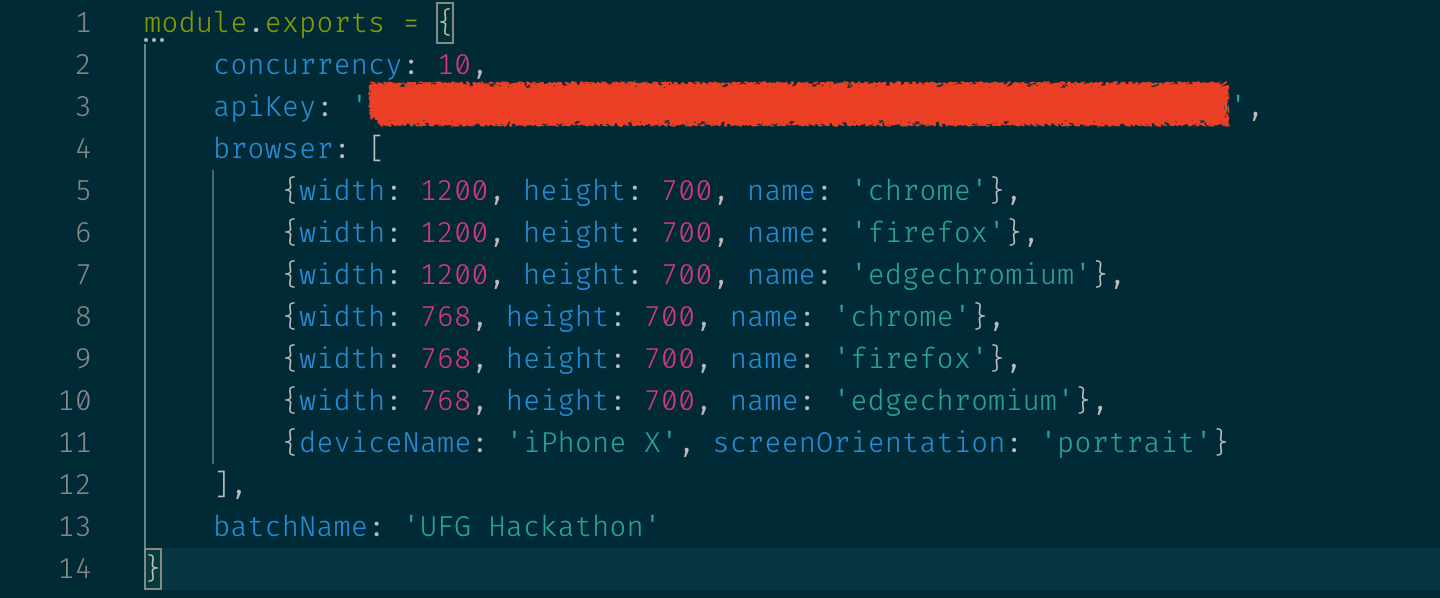

Easy cross-browser testing should also be easy to maintain. All the configuration of browsers, layouts and concurrency happened inside `applitools.config.js`, a mighty elegant alternative to the many, many lines of capabilities that plague Selenium-based tools.

In total, I added three short spec files (between 23 and 34 lines, including all typical boilerplate). We were instructed to execute these tasks against the V1 website then mark the runs as our baselines. We would then perform the needed refactors to execute the tasks against the V2 website and mark all the bugs in Applitools.
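A spec file of this kind might look roughly like the sketch below. The file name, the URL scheme, the app name and the test names are hypothetical; only the `cy.eyesOpen`, `cy.eyesCheckWindow` and `cy.eyesClose` commands come from the Applitools SDK for Cypress. The guard at the bottom is there purely so the file can also be loaded outside Cypress; a real spec would normally call `describe` directly.

```javascript
// cypress/integration/product-listing.spec.js (hypothetical file name)
// Assumes the Applitools Eyes SDK for Cypress is installed and set up.

// Hypothetical URL scheme: switching "V1" to "V2" retargets the whole spec.
const SITE_VERSION = "V1";
const pageUrl = (version) => `https://shop.example.test/listing${version}.html`;

const registerSpec = () => {
  describe("Product listing page", () => {
    beforeEach(() => {
      // Start a visual test; the names are placeholders.
      cy.eyesOpen({ appName: "Demo shop", testName: "Listing layout" });
      cy.visit(pageUrl(SITE_VERSION));
    });

    it("matches the baseline on every configured browser", () => {
      // Capture the full page; the grid renders it once per configuration.
      cy.eyesCheckWindow({ tag: "Product listing", fully: true });
    });

    afterEach(() => {
      // End the visual test so the results are reported.
      cy.eyesClose();
    });
  });
};

// Register only under Cypress, where `cy` is a global.
if (typeof cy !== "undefined") {
  registerSpec();
}
```

With the version kept in a single constant, pointing the spec at the second website is a one-character change per file.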

Applitools’ Visual AI did its job so well, all I had to do was mark the areas it detected and do a write-up!

In summary, for the modern tests:

- two npm dependencies,

- a one-line initialisation of the Applitools SDK,

- 6 CSS selectors,

- 109 total lines of code,

- a 3 character difference to “refactor” the tests to run against a second website,

all done in under an hour.

Performing a visual regression for all seven configurations added no more than 20 seconds to the execution time. It all worked as advertised, on the first try. That is proof that cross-browser testing can be easy.

Setting up the traditional cross-browser tests

For the traditional tests I implemented features that most software testers are either used to or would implement themselves: a spec-file per layout, page objects, custom commands, (attempted) screenshot diff-ing, linting and custom logging.

This may sound like overkill compared to the above, but I aimed for feature parity and reached this end structure iteratively.

Unfortunately, none of the plug-ins I tried for screenshot diff-ing (`cypress-image-snapshot`, `cypress-visual-regression` and `cypress-plugin-snapshots`) gave results in any way similar to Applitools. I will not blame the plug-ins, though, as I had a limited amount of time to get everything working and most likely gave up far sooner than I should have.

Since screenshot diff-ing was off the table, I chose to check each individual element. In total, I ended up with 57 CSS selectors, and to make future refactoring easier I implemented page objects. Additionally, I used a custom method to log test results to a text file, as this was a requirement for the hackathon.
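The logging part can be sketched as a small formatting helper. The result fields and the line format below are hypothetical and do not reproduce the format the hackathon asked for; the point is only that every checked element produces one line of text.

```javascript
// Hypothetical result shape and line format, for illustration only.
function formatResultLine({ task, testName, selector, browser, viewport, passed }) {
  const status = passed ? "Pass" : "Fail";
  return (
    `Task: ${task}, Test Name: ${testName}, Selector: ${selector}, ` +
    `Browser: ${browser}, Viewport: ${viewport}, Status: ${status}`
  );
}

// Example: one line for a single element check.
const exampleLine = formatResultLine({
  task: 1,
  testName: "Search field is displayed",
  selector: "#search",
  browser: "firefox",
  viewport: "1200x700",
  passed: true,
});
```

Inside a Cypress test, a line like this could be appended to a file with `cy.writeFile("results.txt", exampleLine + "\n", { flag: "a+" })`, where the file name is again just a placeholder.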

I did not count all the lines of code in the traditional approach, as the comparison would have been absurd, but I did keep track of the work needed to refactor for V2: 12 lines of code, covering multiple CSS selectors and assertions. None of this work is needed when Applitools is used; “selector maintenance” just isn’t a thing!

Applitools will intelligently find every single visual difference between your pages, while traditionally you’d have to know what to look for, define it and define what the difference should be. Is the element missing? Is it of a different colour? A different font or font size? Does the button label differ? Is the distance between these two elements the same? All of this investigative work is done automatically.
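To make the contrast concrete, here is a simplified sketch of what "knowing what to look for" means in the traditional approach. The selectors, properties and values are made up, and the comparison runs on plain objects rather than a live page; in Cypress each entry would become an assertion such as `cy.get(selector).should("have.css", property, value)`.

```javascript
// Every property to verify must be listed by hand; anything omitted goes unchecked.
// Selectors and values are hypothetical.
const expectedStyles = {
  "#filter-button": { display: "block", color: "rgb(0, 0, 0)" },
  ".product-title": { "font-size": "14px" },
};

// Compare the hand-written expectations with what was observed on the page.
function findDifferences(expected, actual) {
  const differences = [];
  for (const [selector, properties] of Object.entries(expected)) {
    const found = actual[selector];
    if (found === undefined) {
      differences.push(`${selector}: element missing`);
      continue;
    }
    for (const [property, value] of Object.entries(properties)) {
      if (found[property] !== value) {
        differences.push(
          `${selector}: ${property} is ${found[property]}, expected ${value}`
        );
      }
    }
  }
  return differences;
}
```

A change in any property that is not in the list, such as a shifted margin or a different font, passes silently, which is exactly the gap a visual comparison closes.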

Conclusion

All in all, it has genuinely been an eye-opening experience, as the tasks were similar to what we’d need to do “in the real world” and the total work done exceeds the scope of usual PoCs.

My thanks to everyone at Applitools for offering this opportunity, with a special shout out to Stas M.!

For More Information

- Sign up for a free Applitools account.

- Request a demo of the Applitools Ultrafast Grid.

- How Do You Catch More Bugs In Your End-To-End Tests?

- How I ran 100 UI tests in just 20 seconds

- The Impact of Visual AI on Test Automation Report

- Five Data-Driven Reasons To Add Visual AI To Your End-To-End Tests

Dan Iosif serves as SDET at Dunelm in the United Kingdom. He participated in the recently completed Applitools Ultrafast Cross Browser Hackathon.