Earlier in my career (though it seems like yesterday), the product teams I was part of did everything “on-prem,” and angst-ridden code compiles happened every few months. We’d burn down bugs using manual QA and push to production after several long nights, then take a long nap and do it all again the next quarter.

This XKCD cartoon captures the life:

Today, many of you work on an incredibly efficient scrum team. You have a huge range of technologies at your command:

- Distributed container operation – perhaps Docker or Kubernetes.

- Continuous integration – through code repositories like GitHub that track every trunk and branch.

- Integrated testing – logging what you find in Jira.

- Real-time collaboration via Slack all along the way, in hopes of catching bugs early and getting them fixed quickly.

- Continuous deployment – perhaps using Jenkins, Azure DevOps, Codefresh, TeamCity, or CircleCI.

As we develop more efficient code-delivery processes, we rely on tools that help us become more productive. That seems fair, since our fellow software developers build products vital to the efficiency of other departments in our companies. Sales teams rely on Salesforce. Marketing teams depend on Marketo or HubSpot. Designers need the Adobe Creative Suite. Product teams rely on Pendo or Mixpanel. We all use Slack. The reality is that teams in every part of the company must constantly find ways to be more efficient and more productive.

Seeking Quality Engineering Productivity Improvements

Software development took a giant leap in productivity when browsers started to standardize. Before then, developers struggled to build functional applications for web browsers, as each browser had its own behavior and quirks. It was almost easier to create apps for Mac OS and Windows, since you knew exactly which platform each app would run on. But with standardization on HTML, CSS, and JavaScript, web apps offered the promise of “code once, run everywhere.”

If you’re a quality engineer, you know that you’re on the hook to validate that the code does run everywhere. And, frankly, where is the tool that lets you test once and validate everywhere?

I’m not saying that quality engineers lack productivity tools. Software test automation has helped quality engineers drive app behavior, from paid tools by companies like Mercury/HP to the open-source Selenium browser automation project created by Jason Huggins. Selenium has been extended in multiple ways with various open-source drivers, bindings, plugins, and frameworks, including major Applitools contributions. The most substantial of these was a refactor of Selenium IDE that included a Visual AI plug-in for far more efficient record-and-playback testing.

Everyone uses browser automation for testing. However, almost everyone also uses hand-coded validation. Even companies that automate their web element locator infrastructure to ensure consistent locators from build to build still need to write the validation code. Yes, you need locators to drive application behavior. But how many lines of validation code do you write for every test condition you set up?

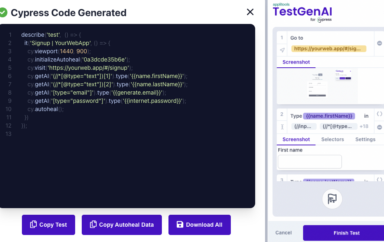

Code-based approaches continue to refine test control. Appium emerged in 2012 and is more vital than ever, especially for native mobile applications. Most recently, Cypress.io modernized the code-based approach with features geared toward managing quality earlier in the development cycle. You’re probably familiar with Test Automation University (a.k.a. TAU), which now boasts over 40 free online courses on emerging quality engineering techniques that rely on code-based frameworks, including an Introduction to Cypress by Gil Tayar, Selenium courses from Angie Jones, and an Appium course from Jonathan Lipps. But you still need to ask yourself an important question.

Are Your Code-Based Tests Efficient Enough?

At Applitools, no matter how you test your apps (Selenium, Cypress, Appium, or any combination of them), we exist to help make you much more efficient.

According to the State of Automated Visual Testing report, the average business app requires 82,000 pages and screens, with the largest companies in the world managing over 600,000 pages and screens. Just writing the UI test controls for the behaviors you need to verify can overwhelm you and your peers, and having to maintain your results-inspection code on top of that can bury you.

If you are like many of your peers, you triage time vs. effort vs. coverage. You:

- Inspect what you expect to change.

- Spend time – sometimes too much time – updating broken tests.

- Limit inspection – judiciously – for behavior changes you think unlikely.

And, to your chagrin, late-stage UI bugs escape.

Using Visual Inspection

Visual page inspection has offered the promise of 100% visual coverage. What a great concept! Grab the whole page now, compare it to the last version, and show me all the differences. Tens or hundreds of lines of page inspection code, reduced to a single instruction that captures the screen. However, the reality of commercial and open-source visual test tools hasn’t matched the promise. Visual test tools using pixel-diffing, and DOM inspectors using DOM-diffing, have shown time and again that they suffer from false positives, reporting failures that aren’t real and adding extra work for you to track down whether each reported issue actually matters.
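To make the false-positive problem concrete, here is a toy sketch of naive pixel diffing in Python. It assumes a screenshot is nothing more than a grid of grayscale values; `make_screenshot` and `pixel_diff` are hypothetical helpers written for illustration, not part of any testing tool. Rendering the same element one pixel to the right, a change no user would notice, still produces a nonzero diff.

```python
from typing import List

# A screenshot is modeled as a grid of grayscale pixels: 0 = black, 255 = white.
Image = List[List[int]]


def make_screenshot(bar_column: int, width: int = 12, height: int = 8) -> Image:
    """Hypothetical screenshot: a white page with a 2-pixel-wide black bar
    (standing in for a button edge or a text stroke) starting at bar_column."""
    return [
        [0 if bar_column <= x < bar_column + 2 else 255 for x in range(width)]
        for _ in range(height)
    ]


def pixel_diff(baseline: Image, checkpoint: Image) -> int:
    """Naive pixel diff: count every pixel whose value changed at all."""
    return sum(
        1
        for base_row, check_row in zip(baseline, checkpoint)
        for base_px, check_px in zip(base_row, check_row)
        if base_px != check_px
    )


baseline = make_screenshot(bar_column=5)
shifted = make_screenshot(bar_column=6)  # same content, rendered 1 px to the right

changed = pixel_diff(baseline, shifted)
if changed:
    print(f"{changed} pixels differ: reported as a failure")  # 16 pixels differ
```

Each row loses one black pixel on the left edge of the bar and gains one on the right, so the visually identical page is flagged with 16 changed pixels.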

At Applitools, we recognized that pixel and DOM comparisons would never meet the need. So we invented Visual AI, effectively giving you an assistant that tests the entire app the same way you would with your own eyes, but without ever tiring or missing anything. Visual AI radically changes that scenario by analyzing images, rather than relying on pixel diffs, DOM diffs, or hand-written inspection code, to validate all the elements of the UI in an incredibly accurate and code-efficient manner. You still need browser automation for navigation, but every other aspect of UI testing is handed off to super-efficient Visual AI.

What Is Test Code Efficiency?

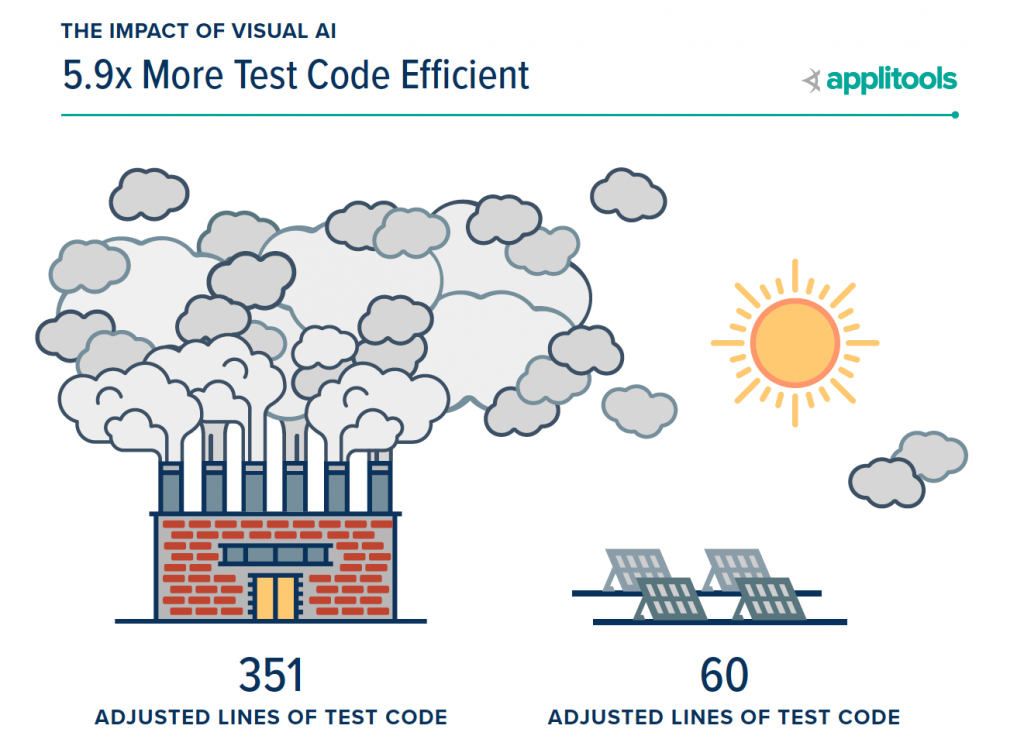

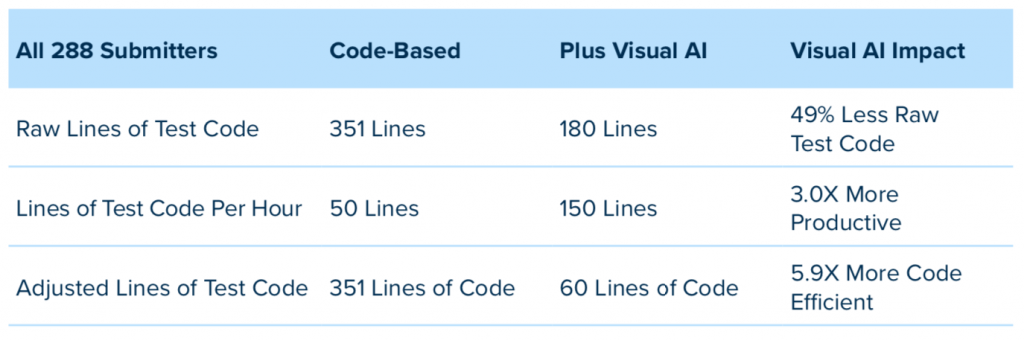

In addition to the standard quality engineering measures of success, such as test coverage, speed, stability, and maintainability, a recently released report, “The Impact of Visual AI on Test Automation,” defines a new measure called test code efficiency. You can also read “Ask 288 Quality Engineers About Visual AI” if you’re curious about where this data came from. Or just read on.

Similar to the concept of “code efficiency” that feature developers look for in their work, test code efficiency measures both:

- The number of lines of test code a quality engineer can write per hour and

- The amount of coverage each line of code provides.
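The two measures can be expressed as simple ratios. The sketch below is an assumption about how they might be computed; the report’s exact formula isn’t given here, and `SuiteStats` and its fields are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class SuiteStats:
    """Hypothetical summary of one engineer's test suite."""

    lines_of_test_code: int
    hours_spent: float
    coverage_pct: float  # share of test conditions covered, 0-100

    def lines_per_hour(self) -> float:
        # Measure 1: how quickly test code gets written.
        return self.lines_of_test_code / self.hours_spent

    def coverage_per_line(self) -> float:
        # Measure 2: how much coverage each line of test code provides.
        return self.coverage_pct / self.lines_of_test_code


# Illustrative inputs only; these are not figures from the report.
suite = SuiteStats(lines_of_test_code=200, hours_spent=10, coverage_pct=80)
print(suite.lines_per_hour())     # 20.0
print(suite.coverage_per_line())  # 0.4
```

Under this framing, reaching the same coverage with fewer lines raises coverage per line, and fewer lines also means less code to write and maintain.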

Visual AI, which captures an image of an entire page with a single open-ended line of code,

```
eyes.checkWindow();
```

is much more efficient because it is so easy to write and maintain, and each line provides both functional and visual coverage. Code-based-only approaches lack that coverage because they rely on closed-end assertions about specific elements, which test before the page is fully rendered. Said another way, Visual AI looks at the app the same way your customers would, with their own eyes. Humans don’t look at an application and see DOM objects, locators, and text labels; they see a fully rendered page that needs to look and function to suit their needs.
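The difference between closed-end assertions and an open-ended whole-page check can be illustrated with a toy model in Python. This is not how Visual AI works internally (it analyzes rendered images, not dictionaries); the page is modeled as a dictionary of element values purely for illustration, and every name here is hypothetical.

```python
from typing import Dict, List

# Toy model of a rendered page: element name -> what the user sees.
Page = Dict[str, str]

baseline: Page = {
    "title": "Welcome back",
    "login_button": "Log in",
    "logo": "acme.png",
    "footer": "Contact us",
}

# Hypothetical new build: the logo failed to load and the footer text vanished.
new_build: Page = dict(baseline, logo="", footer="")


def closed_end_checks(page: Page) -> List[str]:
    """Hand-written assertions: one check per element the author thought of."""
    failures = []
    if page["title"] != "Welcome back":
        failures.append("title")
    if page["login_button"] != "Log in":
        failures.append("login_button")
    return failures  # "logo" and "footer" are never inspected


def whole_page_check(expected: Page, actual: Page) -> List[str]:
    """Open-ended check: compare every element against the stored baseline."""
    return sorted(
        name
        for name in expected.keys() | actual.keys()
        if expected.get(name) != actual.get(name)
    )


print(closed_end_checks(new_build))           # []
print(whole_page_check(baseline, new_build))  # ['footer', 'logo']
```

The hand-written checks pass because they never look at the logo or footer, and covering those would take more lines per element. The single whole-page comparison catches both regressions without any per-element code.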

Code-Based Inspection – Trading Off Efficiency for Excellence

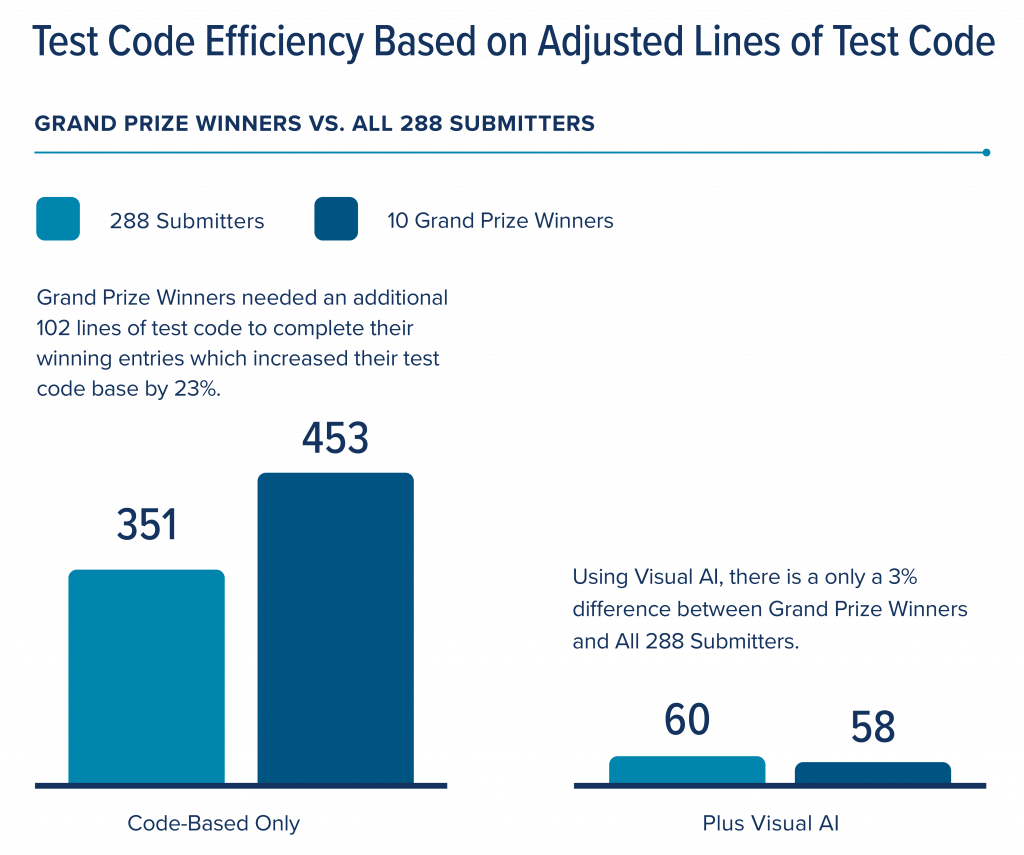

Before going on, a brief explanation of the data will help you understand Visual AI’s impact on test automation in more depth. In creating the report, we looked at three groups of quality engineers:

- All 288 Submitters – This includes every quality engineer who successfully completed the hackathon project. While over 3,000 quality engineers signed up to participate, this group of 288 people is the foundation for the report, and their work amounted to 3,168 hours (80 weeks, or 1.5 years) of quality engineering data.

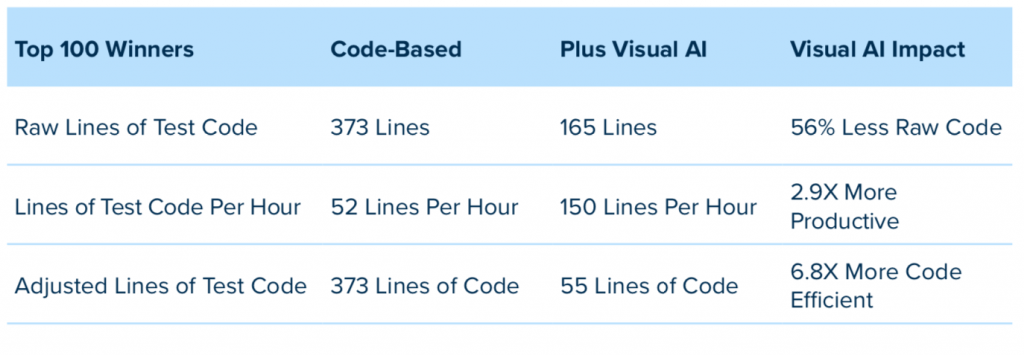

- Top 100 Winners – To gather the data and engage the community, we created the Visual AI Rockstar Hackathon. The 100 quality engineers with the highest point totals, earned for providing test coverage on all use cases and successfully catching potential bugs, won over $40,000 in prizes.

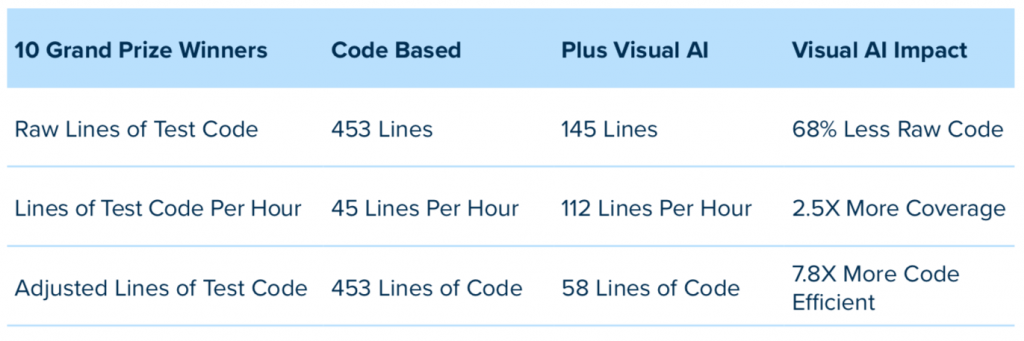

- Grand Prize Winners – This group of 10 quality engineers scored the highest, representing the gold standard of test automation effort.

By comparing and contrasting these different groups in the report, we learn more about the impact of Visual AI on test code efficiency.

What really stands out here is that the Grand Prize Winners, when using a code-based approach exclusively, needed an additional 102 lines of adjusted test code to complete their winning entries. That’s a 29% expansion of the code-based suite, just to use a code-based framework really well and cover 90% or more of potential failure modes. In the real world, we rarely have time to write 351 lines of code, much less 453, especially knowing we then have to maintain these suites indefinitely to support our tests going forward. That’s the very definition of inefficient.
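The numbers in the paragraph above hang together: 102 extra lines on top of 351 gives 453, an increase of roughly 29%. Here is a quick check in Python, assuming 351 is the base the percentage is measured against (the post does not state this explicitly).

```python
# Figures quoted in the paragraph above.
base_lines = 351    # assumed base the 29% expansion is measured against
extra_lines = 102   # additional lines the Grand Prize Winners needed

total_lines = base_lines + extra_lines
expansion_pct = extra_lines / base_lines * 100

print(total_lines)              # 453
print(f"{expansion_pct:.1f}%")  # 29.1%
```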

Now contrast code-based coverage with Visual AI results. Using Visual AI, all testers achieved 95% or more coverage with, on average, just 60 lines of adjusted test code, compared to 315. Even better, it took the Grand Prize Winners just 58 lines of adjusted code to achieve 100% coverage using Visual AI. This trend continues when you compare the data across all three groups, as we did here:

In Conclusion

With just a few key tips on the optimal use of Visual AI, test teams can enjoy a 6x to 10x improvement in test code efficiency.

By vastly reducing the code and code maintenance needed to inspect the outcome of each applied test condition, while at the same time increasing page coverage, testers reduce effort and increase their productivity. That frees up time to increase test coverage significantly while still completing testing orders of magnitude faster after adding Visual AI. This ability is vital to relieving the testing bottleneck that remains a barrier to faster releases for most engineering teams.

So, what’s stopping you from trying out Visual AI for your application delivery process? Download the white paper and read about how Visual AI improved the efficiency of your peers. Then, try it yourself with a free Applitools account.

James Lamberti is CMO at Applitools.