How do you test a design system? You got here because you either have a design system or know you need one. But, the key is knowing how to test its behavior.

Marie Drake, Principal Test Automation Engineer at News UK, presented her webinar, “Roadmap To Testing A Design System”, where she discussed this topic in some detail.

Marie is many things. In addition to her work at News UK, she is a Cypress Ambassador and organizer of the Cypress UK community group. If you want to know more about using Cypress, she’s a great speaker. She also blogs about testing and tech at mariedrake.com.

This post summarizes her webinar and highlights some of the key points.

Who Is News UK?

News UK is the UK subsidiary of News Corp, the large global publishing and media company. Marie’s team supports the sites that develop and deliver online versions of The Sun, The Times, and The Sunday Times. They also run the Wireless media site. Marie supports the various development teams that deliver news and information that change regularly.

Why A Design System At News UK?

Think of a design system as building blocks. A design system provides a repository for design components used to construct your web application. Or, more precisely, applications. By using a design system, you can eliminate redundant work across different parts of your web application.

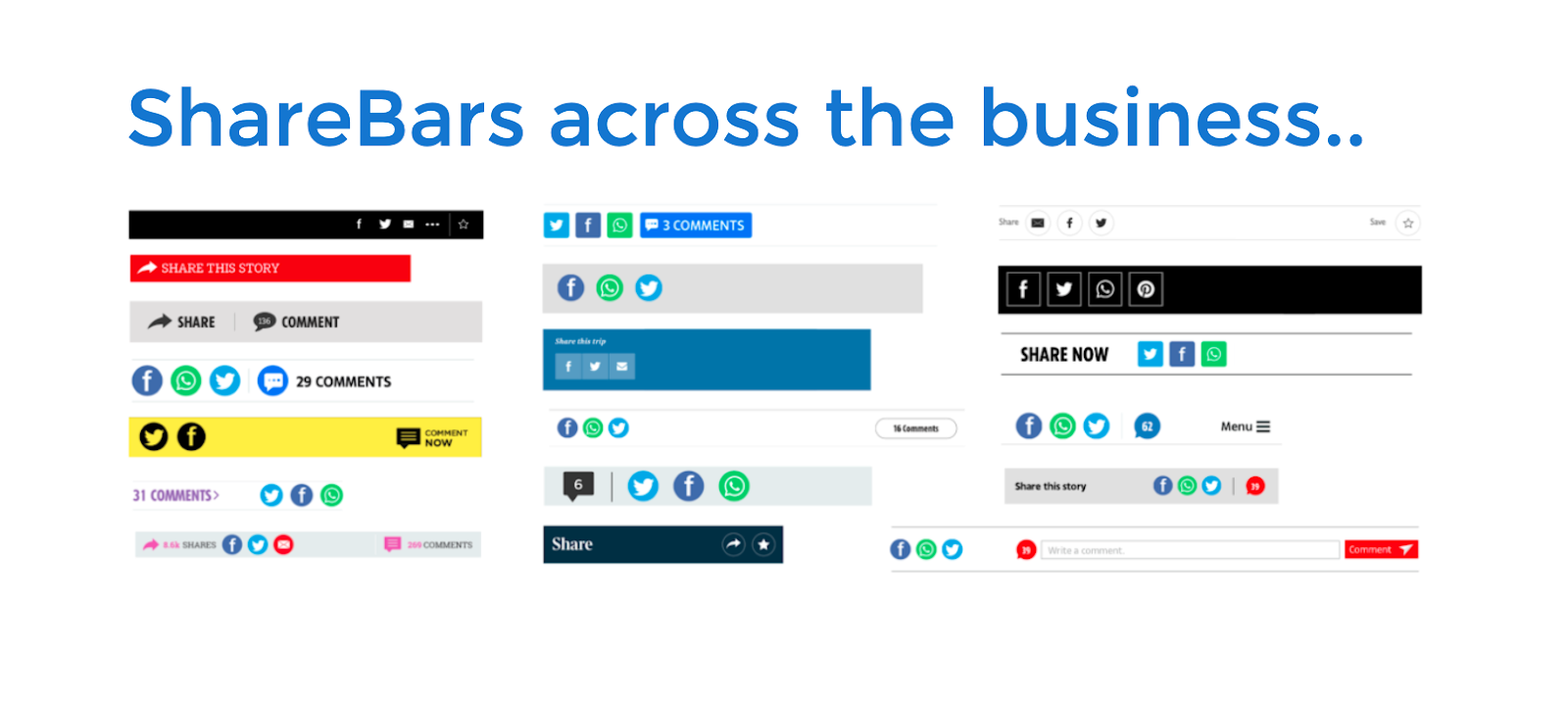

Marie gave the example of “share bars” at News UK. Share bars let you share content to social networks like Twitter, Facebook, Instagram, and WhatsApp. You have likely seen share bars on blogs or media pages. Inside News UK, design groups had coded their own share bars. The team found 19 different share bars in use across different parts of the News UK business.

Lots of redundant code written by different people drives up the cost of maintenance. Sure, having 19 different teams write 19 different parts of the app sounds like a great division of labor. But when you end up with 19 different share bars, how do you maintain them? How do you choose one for the next part of your web business? What happens when you decide to resize the share bars across your site?

Fast Coding Does Not Equal Agility

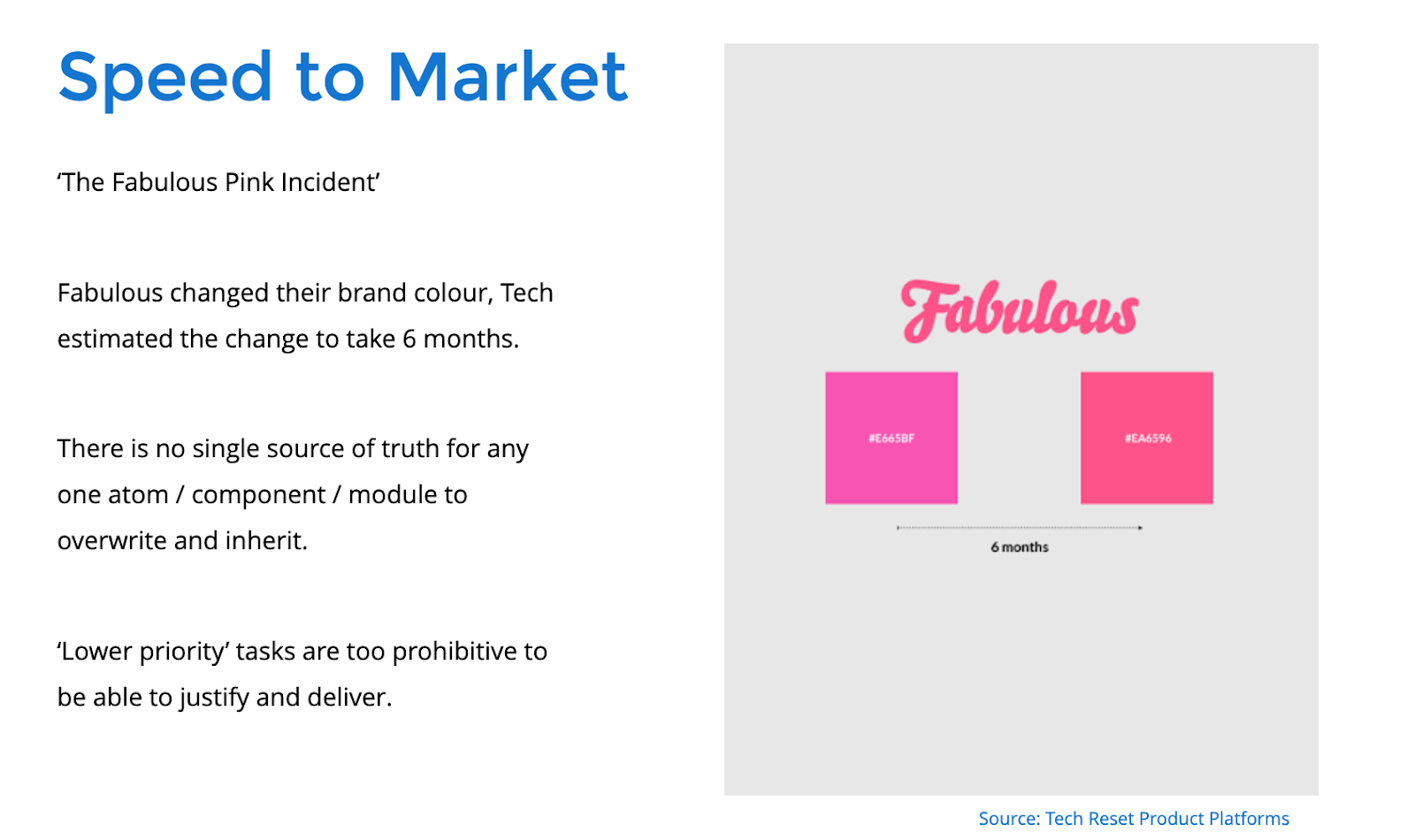

Marie showed an even more problematic example of a web business, Fabulous, that wanted to change their brand color from #E665BF to #EA6596. When engineers looked at the potentially impacted code and the areas requiring post-change validation, they estimated the change would take six months. Half a year for a color change?

The coding effort at Fabulous involved two code bases. First, they had the website that needed to be updated. Second, they had the tests that needed to be updated to match the new site. A large part of the test change – even with no functional or other visual change – just required code inspection to ensure that the desired branding color change had been applied as expected across the entire site.

Marie’s seven-plus years in software quality led her to understand that raw coding speed rarely correlates with agility. Here, “agility” means something different from “agile.” In software, agility comes from the ability to make quick changes with confidence both that they have the intended effect and that they cause no unexpected changes. While many software developers can write code quickly, few write thoughtfully in ways that make code maintainable, especially across an entire site.
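To make the link between a design system and agility concrete, here is a minimal sketch of how a shared design token could turn a brand color change into a one-line edit. It is illustrative only: the `tokens` object and the `shareBarStyle` and `buttonStyle` functions are hypothetical names, not code from News UK or Fabulous.

```typescript
// Hypothetical design tokens: one source of truth for brand values.
interface Tokens {
  brandColor: string;
  spacingPx: number;
}

const tokens: Tokens = {
  brandColor: "#EA6596", // previously "#E665BF"; changed in this one place only
  spacingPx: 8,
};

// Hypothetical components that consume tokens instead of hard-coding values.
function shareBarStyle(t: Tokens): Record<string, string> {
  return { background: t.brandColor, padding: `${t.spacingPx}px` };
}

function buttonStyle(t: Tokens): Record<string, string> {
  return { borderColor: t.brandColor, margin: `${t.spacingPx * 2}px` };
}
```

Because both components read `tokens.brandColor`, neither needs to be edited when the brand color changes. What remains is verifying that the change rendered correctly everywhere, which is the testing problem the rest of the webinar addresses.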

Benefits of the News UK Design System

In describing the design system deployed by News UK, Marie quickly pointed out its benefits.

- Cost efficient. Once you set up a design system, you have standardized building blocks for building your site. If you can use the design system to customize, your teams can consume the building blocks instead of rewriting from scratch. And, you reduce software maintenance costs.

- Reusable. A good design system allows you to re-use code.

- Speed To Market. As mentioned in the section on agility, the design system reduces the amount of code you need to write from scratch. It also reduces the amount of code you will manually change as you make updates.

- Scalable. A good design system lets multiple users access the system – making the developers much more efficient.

- Standard Way Of Working. With a design system, you standardize the process of writing code. You can help new people get up to speed on existing code and simplify the code maintenance process.

- Consistency. In the end, you can look to the design system to ensure consistent behavior (visual, functional) from your applications.

Marie showed a loop of the design system at News UK. The components get developed and maintained in Storybook. Developers can grab elements and add them into applications being built. The playground feature in Storybook makes it easy for developers and designers to play with Storybook components to mock up the functioning web application before it gets built.

As Marie pointed out, consistency in the components simplifies both development and testing.

Testing A Design System – Requirements

If a design system should make code easier to create and maintain, how do you test a design system?

Marie started outlining the testing requirements developed by News UK.

- Test different components easily. Expect the system to mature and develop over time. Some components will be entirely visual, and some may include audio. Make sure all this works.

- Test cross-browser. News UK needed this capability as they knew their content got consumed on mobile devices and a range of platforms.

- Write visual tests with low maintenance in mind, to reduce the impact on the testing workflow and speed up the testing of small changes that touch lots of components.

- Deliver a high-performing build pipeline: build plus test concludes within 10 minutes.

- Integrate design review earlier in the process to improve collaboration, find misunderstandings and differences between design and development early in the process.

- Test for accessibility on both the component and site level for all users.

- Catch functional issues early.

- Have all tests written before deploying a feature. There are 2 full-time QA engineers on the Product Platforms team, so they need to share QA responsibility with developers.

Testing A Design System – Strategy

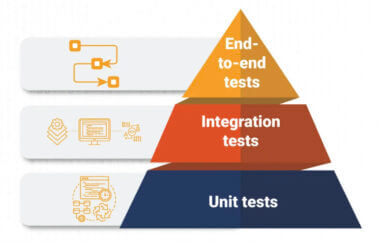

From here, Marie outlined the strategy to run tests of the design system.

- First, unit testing. Developers must write unit tests for each component and component modification.

- Second, snapshot testing. Capture snapshots and validate the status of component groups.

- Third, component testing. All components need to be validated for functionality, visual behavior, and accessibility.

- Fourth, front-end testing. Make sure the site behaves correctly with updated components. Validate for functionality, visual behavior, and accessibility.

- Fifth, cross-browser testing. Ensure there are no unexpected differences on customer platforms.

Testing A Design System – Challenges

Marie described some of the challenges with different test approaches.

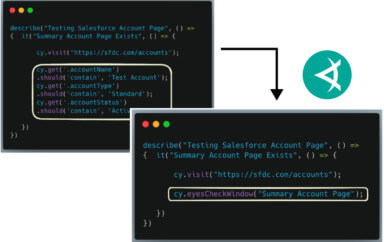

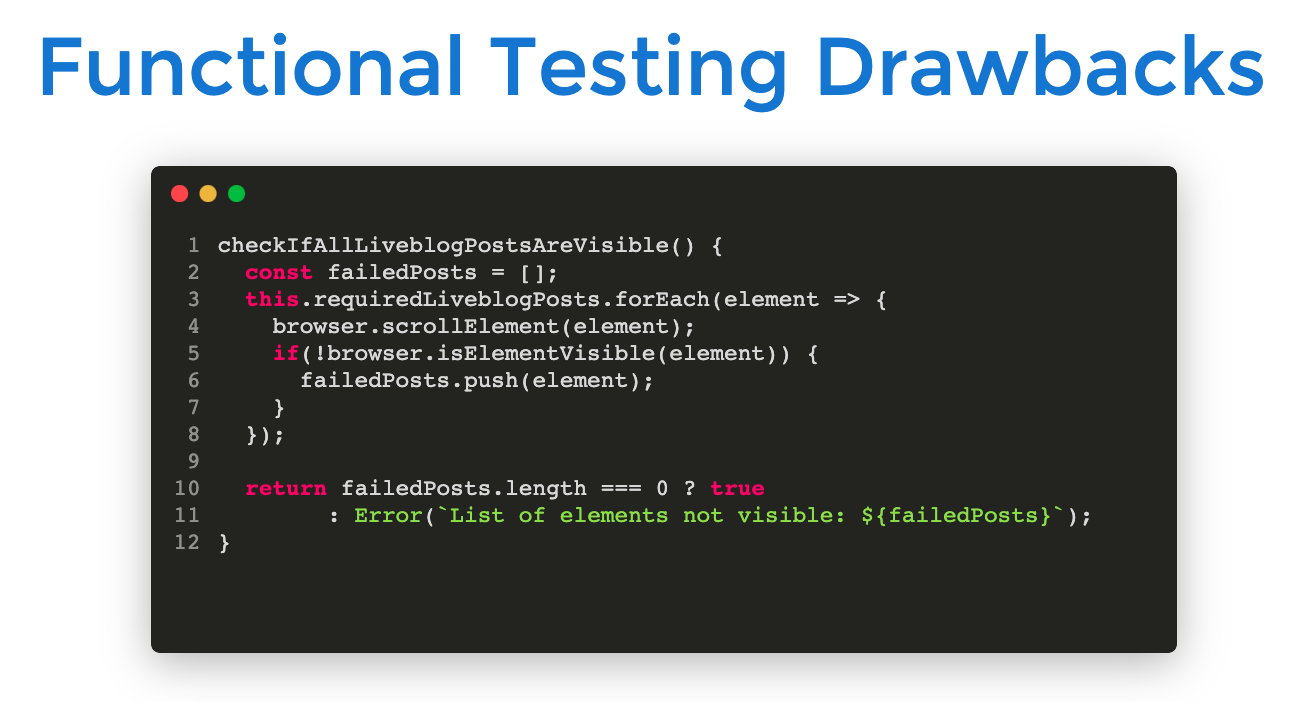

Purely functional tests can include lots of code. Marie’s pseudocode shows this problem. The more comprehensive your functional tests, the more code those tests contain. Assertion code, the code used to inspect the DOM for visual elements, becomes a maintenance burden for your team going forward.

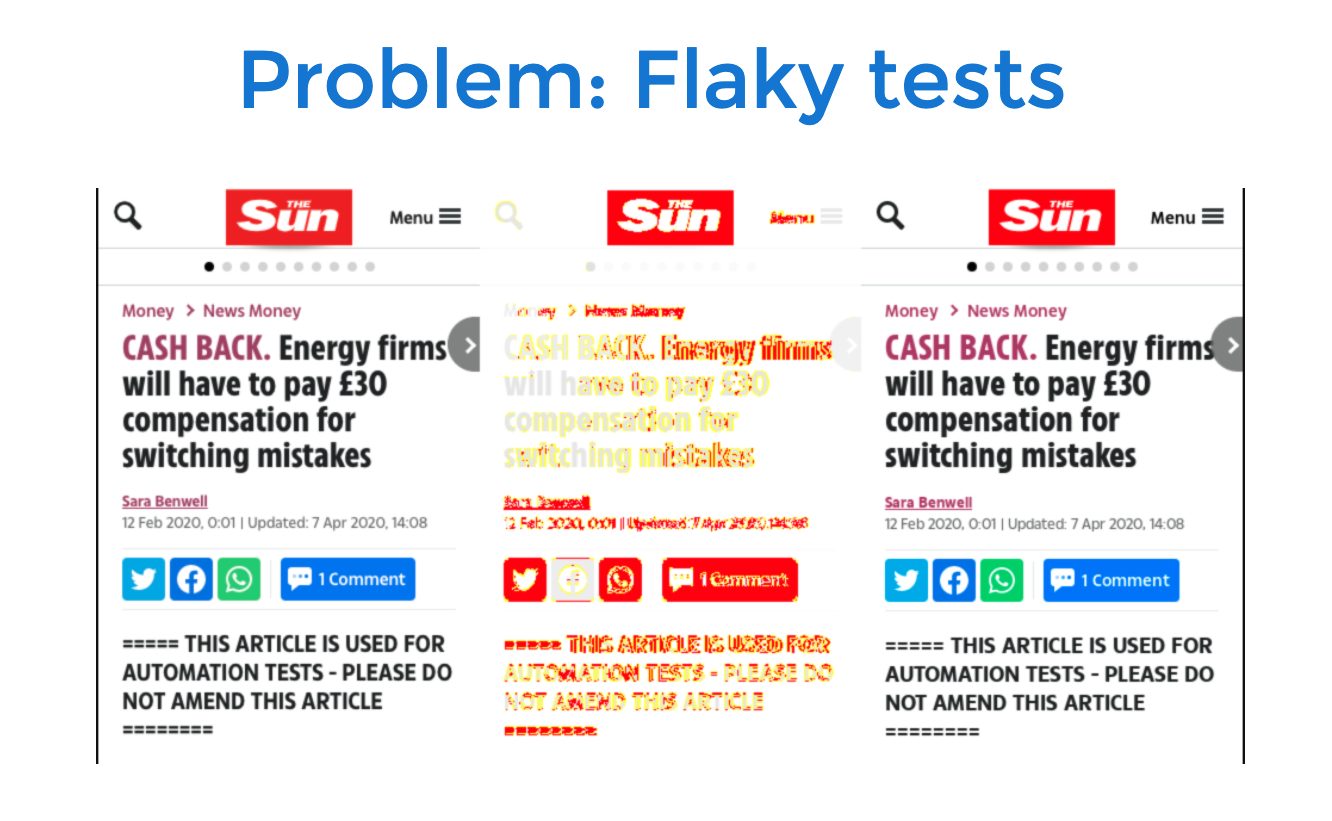

Visual testing serves a strategic function, except that most visual testing approaches suffer from inaccuracy. Marie showed an example of a “spot-the-differences” game, which highlighted the challenges of a manual visual test. Then, she showed pixel differences, which she found became problematic in cross-browser tests. From a user’s perspective, the pages looked fine. The pixel comparison flagged differences that, after time-consuming inspection, her team judged to be inconsequential pixel variations.
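The noise in pixel comparison can be illustrated with a small sketch. It is a simplified model, not the algorithm of any tool mentioned in the webinar: images are reduced to short arrays of grayscale values, and the sample data and function names are invented for the example.

```typescript
// A tiny "image" as a flat array of grayscale pixel values (0-255).
type Pixels = number[];

// Strict comparison: counts every pixel whose value differs at all.
function countStrictDiffs(a: Pixels, b: Pixels): number {
  if (a.length !== b.length) throw new Error("images must be the same size");
  let diffs = 0;
  for (let i = 0; i < a.length; i++) {
    if (a[i] !== b[i]) diffs++;
  }
  return diffs;
}

// Tolerant comparison: ignores differences at or below `tolerance`.
function countTolerantDiffs(a: Pixels, b: Pixels, tolerance: number): number {
  if (a.length !== b.length) throw new Error("images must be the same size");
  let diffs = 0;
  for (let i = 0; i < a.length; i++) {
    if (Math.abs(a[i] - b[i]) > tolerance) diffs++;
  }
  return diffs;
}

// Hypothetical data: the same edge rendered by two browsers
// with slightly different anti-aliasing.
const renderA: Pixels = [0, 0, 128, 255, 255];
const renderB: Pixels = [0, 0, 131, 255, 254];

const strictDiffs = countStrictDiffs(renderA, renderB); // 2
const tolerantDiffs = countTolerantDiffs(renderA, renderB, 5); // 0
```

The strict comparison reports two differences that no user would notice. A fixed tolerance hides them, but the same threshold could also hide a small real defect, which is one reason simple pixel diffs are hard to tune across browsers.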

Another visual testing inaccuracy Marie described involved visual testing of dynamic data. On news sites, content changes frequently as news stories get updated. When the data changes, does the visual test fail?

Marie and her team had chosen to use available open-source tools for visual testing. Marie showed some of the visual errors that got through her testing system. These had passed functional tests but weren’t caught visually.

So, Marie and her team discovered that their existing tests let visual bugs through. They knew they needed to solve their visual testing problem.

Choosing New Tools

Marie’s team looked at three potential solutions to their visual testing problem: Screener, Applitools and Happo. After putting all three through their paces, the team settled on Applitools for accuracy. Its markedly higher accuracy helped Marie make the case for News UK to purchase a commercial tool instead of adopting an open-source solution.

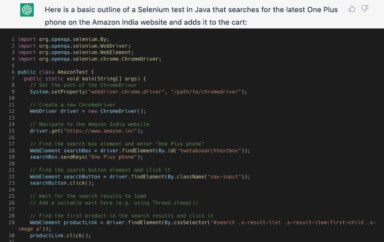

The team also looked at UI testing tools. They looked at Puppeteer, Selenium, and Cypress for driving web application behavior. As a team, they chose Cypress. They could have used any of these tools with Applitools. Marie’s team chose Cypress because its developer-friendly user experience made it easy for developers to write and maintain tests.

The final test suite included:

- Jest for managing test flow

- Cypress for running tests

- Applitools for visual tests

- Google Lighthouse and Cypress AXE for accessibility testing

- WAVE for testing Doc accessibility

- Safari with VoiceOver for screen reader validation

Using Applitools

Next, Marie shared the approach her team used for deploying Applitools.

Prior to using any part of Applitools, the team needed to deploy an API key. This key, found on the Applitools console, permits users to access the Applitools API. Once read into the test environment, the key grants the tests access to the Applitools service.
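As a rough sketch of reading the key into the test environment: Applitools SDKs conventionally look for the key in the `APPLITOOLS_API_KEY` environment variable. The helper below is hypothetical and not part of any SDK; it only shows a fail-fast check you might run before the tests start.

```typescript
// The environment is passed in as a plain object so the helper is easy to test;
// in a Node.js test run you would pass process.env.
type Env = Record<string, string | undefined>;

// Hypothetical helper: fail fast with a clear message when the key is missing.
function requireApplitoolsKey(env: Env): string {
  const key = env["APPLITOOLS_API_KEY"];
  if (key === undefined || key.trim() === "") {
    throw new Error("APPLITOOLS_API_KEY is not set");
  }
  return key;
}
```

Keeping the key in the environment, rather than in the repository, lets the same tests run locally and in CI with different credentials.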

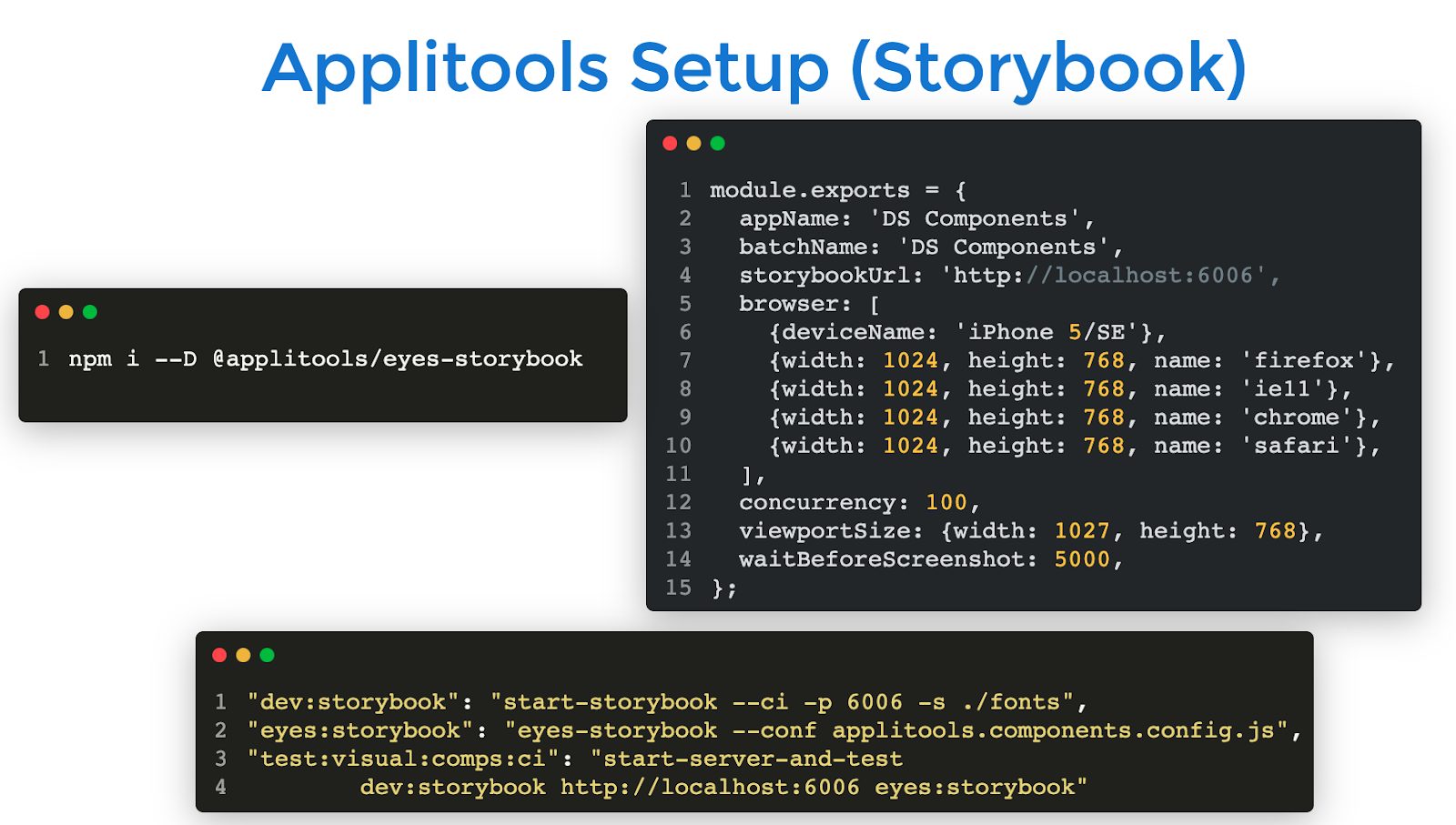

The team needed to add the Eyes code to Storybook for component tests and to Cypress for the site-level tests.

Component Tests

Next, Marie demonstrated the code for validating the Storybook components. The tests involved cycling through Storybook and having each component captured by Applitools. Each component test either matched its baseline in Applitools or showed differences. The test team would walk through the flagged differences and either approve the change, updating the baseline image with the new capture, or reject the change and send the component back to the developers.

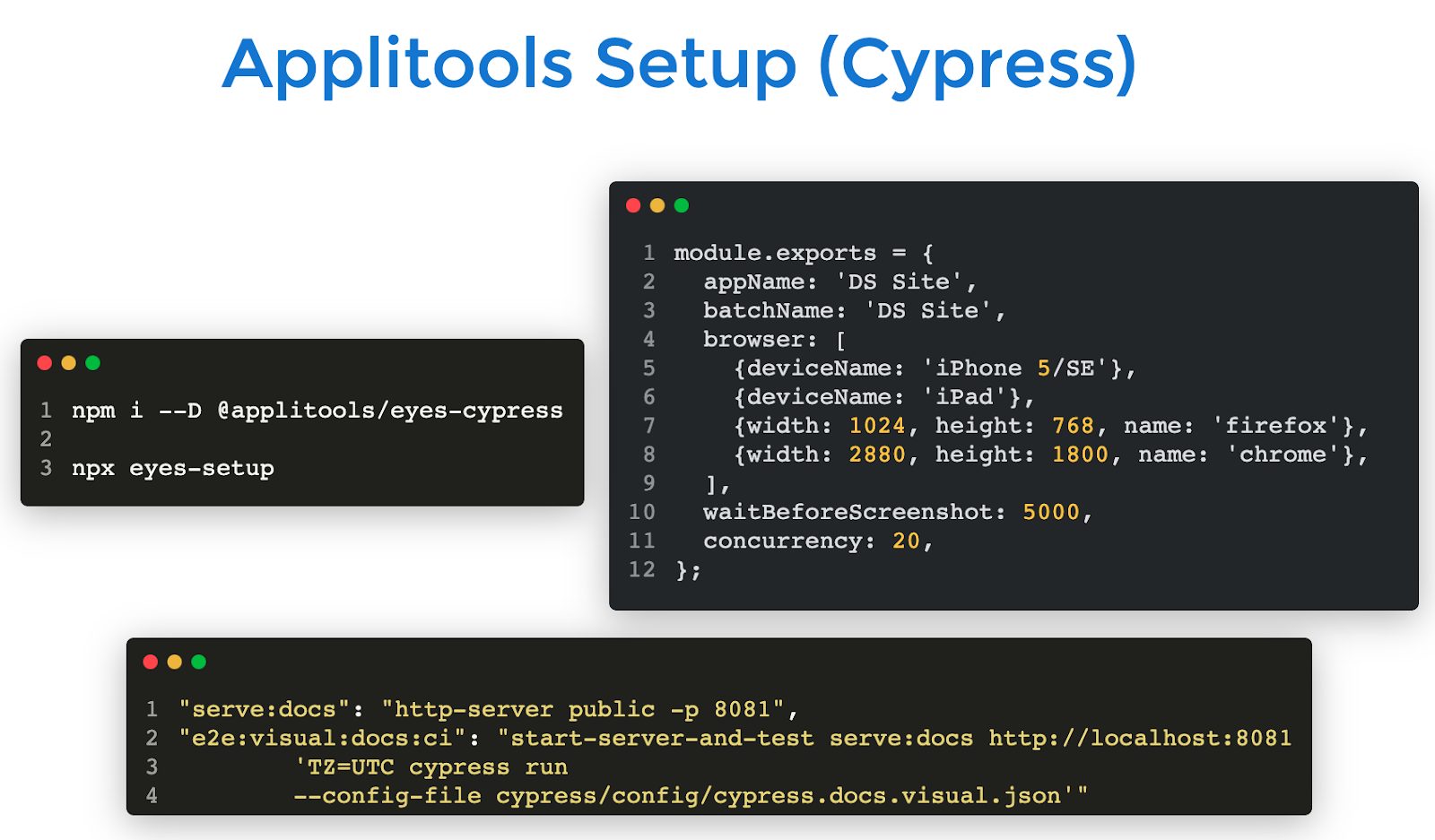

Cypress Tests

Similar to the component tests, the Cypress tests integrated Applitools into captures of the built site using the new components. Again, Applitools compared each capture against the existing baseline to find differences.

For Marie’s team, one great part about using Applitools involved the built-in cross-browser testing using the Applitools Ultrafast Grid. Simply by specifying a file of target browsers and viewport sizes, Applitools could automatically capture images for the targets separately and compare each against its baseline.
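The list of target browsers and viewports can be pictured as a small matrix. The sketch below is written in TypeScript purely for illustration. The Applitools Cypress SDK reads a similar list from the `browser` entry of `applitools.config.js`, but the specific targets, the `BrowserTarget` type, and the `totalRenders` helper are assumptions made for this example, so check the SDK documentation for the exact supported names and options.

```typescript
// Assumed shape of one cross-browser target: a browser name plus a viewport size.
interface BrowserTarget {
  name: "chrome" | "firefox" | "safari";
  width: number;
  height: number;
}

// Hypothetical target list: three desktop browsers and one narrow viewport.
const browserTargets: BrowserTarget[] = [
  { name: "chrome", width: 1280, height: 800 },
  { name: "firefox", width: 1280, height: 800 },
  { name: "safari", width: 1280, height: 800 },
  { name: "chrome", width: 375, height: 812 },
];

// Each visual checkpoint is rendered once per target and compared
// against that target's own baseline.
function totalRenders(checkpoints: number, targets: BrowserTarget[]): number {
  return checkpoints * targets.length;
}

const renders = totalRenders(10, browserTargets); // 10 checkpoints x 4 targets = 40
```

The point of the matrix is that the test code stays the same: adding a browser means adding one entry to the list, not writing another test.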

Auto Maintenance

Marie talked about one of the great features in Applitools – Auto Maintenance. When Applitools discovers a visual difference, it looks for similar differences on other pages captured during a test run. When an Applitools user finds a visual difference and approves it, Auto Maintenance lists the other captures for which the identical difference exists. The Applitools user can then batch-approve the identical changes found elsewhere. A single user, in a single step, can approve site-wide menu, logo, color, and other common changes all at once.
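Conceptually, the batch approval described above amounts to grouping differences that look the same. The sketch below is a toy model of that idea, not Applitools' implementation: the `VisualDiff` type and its `signature` field (a stand-in for however identical changes are recognized) are hypothetical.

```typescript
// Hypothetical record of one visual difference found during a test run.
interface VisualDiff {
  page: string;
  region: string; // e.g. "header-logo"
  signature: string; // stand-in fingerprint; identical changes share a signature
}

// Given the diff a reviewer just approved, list every page in the run
// that shows the same change, so all of them can be approved together.
function pagesWithSameChange(approved: VisualDiff, all: VisualDiff[]): string[] {
  return all
    .filter((d) => d.signature === approved.signature)
    .map((d) => d.page);
}

// Hypothetical run: a logo change on three pages and one unrelated change.
const logoOnHome: VisualDiff = { page: "/home", region: "header-logo", signature: "logo-v2" };
const runDiffs: VisualDiff[] = [
  logoOnHome,
  { page: "/sport", region: "header-logo", signature: "logo-v2" },
  { page: "/sport", region: "score-table", signature: "table-shift" },
  { page: "/tv", region: "header-logo", signature: "logo-v2" },
];

const batch = pagesWithSameChange(logoOnHome, runDiffs); // ["/home", "/sport", "/tv"]
```

The unrelated `score-table` change on `/sport` stays out of the batch, so it still gets its own review.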

Handling Dynamic Changes

Another benefit of Applitools involves pages with dynamic data. In addition to the example of news items updating regularly, Marie showed an example of the new Internet radio service offered by News UK. The player page can sometimes show different progress in a progress bar during different captures, depending on data being read when taking a screen capture.

Applitools has a layout mode that ensures that all the items exist in a layout, including the relative location of the items, but layout mode ignores content changes within the layout.
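The difference between comparing layout and comparing content can be sketched with a toy model. This is a deliberately simplified illustration and not how Applitools computes layout matches: the `Region` type, the reading-order heuristic, and the sample player page are all assumptions.

```typescript
// Hypothetical description of one region on a captured page.
interface Region {
  id: string;
  x: number;
  y: number;
  content: string;
}

// Order regions top to bottom, then left to right, and keep only their ids.
function readingOrder(regions: Region[]): string[] {
  return regions
    .slice()
    .sort((p, q) => p.y - q.y || p.x - q.x)
    .map((r) => r.id);
}

// Layout-style check: same regions in the same relative order; content is ignored.
function sameLayout(a: Region[], b: Region[]): boolean {
  return readingOrder(a).join("|") === readingOrder(b).join("|");
}

// Content-style check: every region must also carry identical content.
function sameContent(a: Region[], b: Region[]): boolean {
  if (!sameLayout(a, b)) return false;
  const byId: Record<string, string> = {};
  for (const r of b) byId[r.id] = r.content;
  return a.every((r) => byId[r.id] === r.content);
}

// Hypothetical radio player page captured at two different moments.
const baseline: Region[] = [
  { id: "title", x: 0, y: 0, content: "Now playing: Morning Show" },
  { id: "progress", x: 0, y: 40, content: "12%" },
  { id: "play-button", x: 0, y: 80, content: "Pause" },
];
const checkpoint: Region[] = [
  { id: "title", x: 0, y: 0, content: "Now playing: Drive Time" },
  { id: "progress", x: 0, y: 40, content: "57%" },
  { id: "play-button", x: 0, y: 80, content: "Pause" },
];

const layoutMatches = sameLayout(baseline, checkpoint); // true
const contentMatches = sameContent(baseline, checkpoint); // false
```

In this model the changing show title and progress value fail a content comparison but pass a layout comparison, while a missing or reordered region would fail both.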

Accessibility Tests

Next, Marie talked about accessibility tests.

Marie demonstrated component accessibility testing with Cypress AXE. She showed that, once integrated with Cypress, AXE can cycle through components. Unfortunately, AXE and other automated tests uncover only about 20% of accessibility issues.

Lighthouse and other tests get run manually to validate the rest of visual accessibility.

She also showed the Safari screen reader accessibility testing.

Workflow Integration

Marie then described workflow, and how the workflow integration mattered to the Product Platforms team.

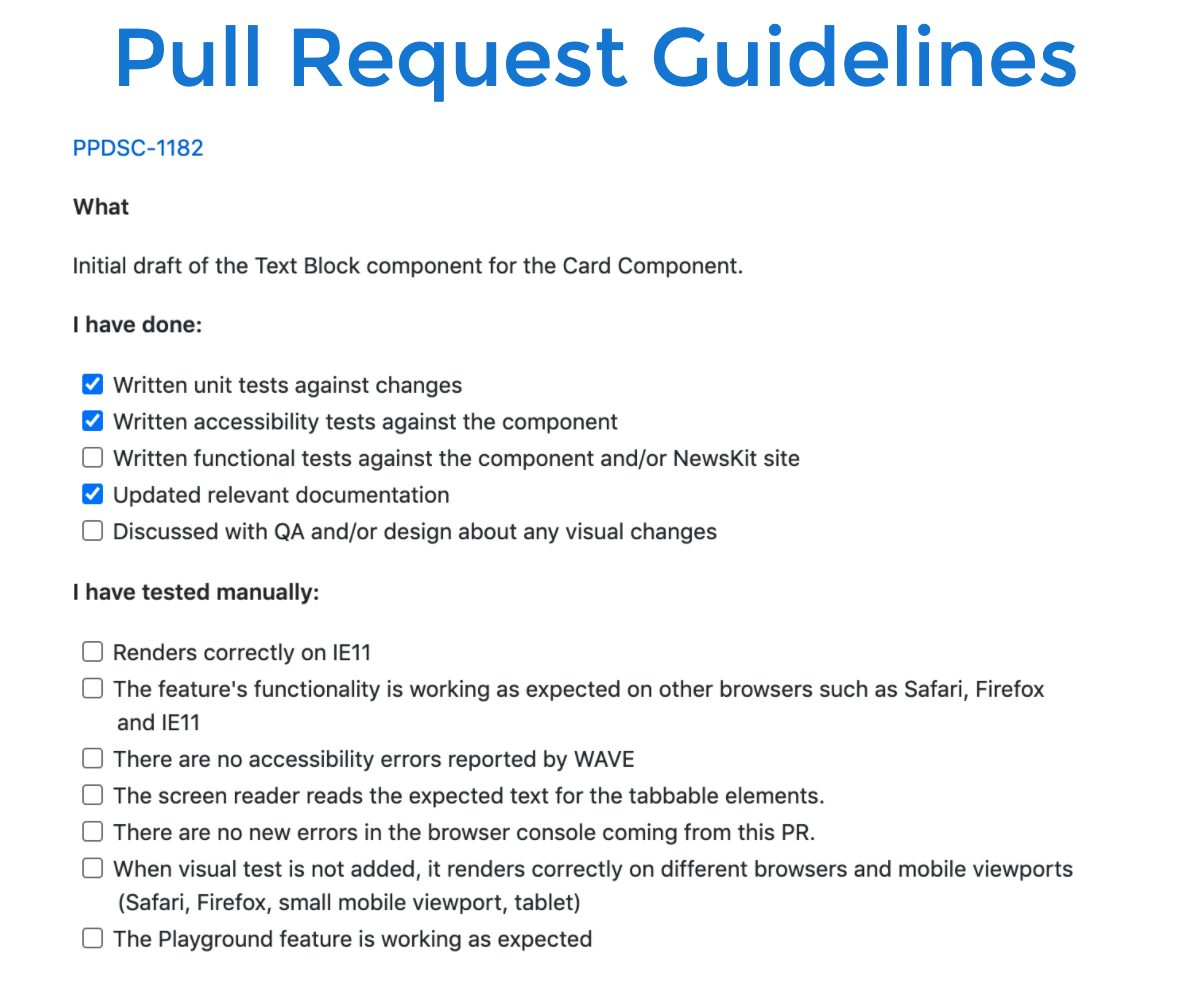

She made the team’s first point – quality is everyone’s responsibility. For the product platforms team, the two quality engineers serve as leads on approaches and best practices. Developers must deliver quality in the design system.

To accentuate this point, she explained that the team had developed pull request guidelines. Check-ins and pull requests required documentation and a testing checklist covering unit, component, and page-level tests. Everyone agreed to this level of work for a pull request.

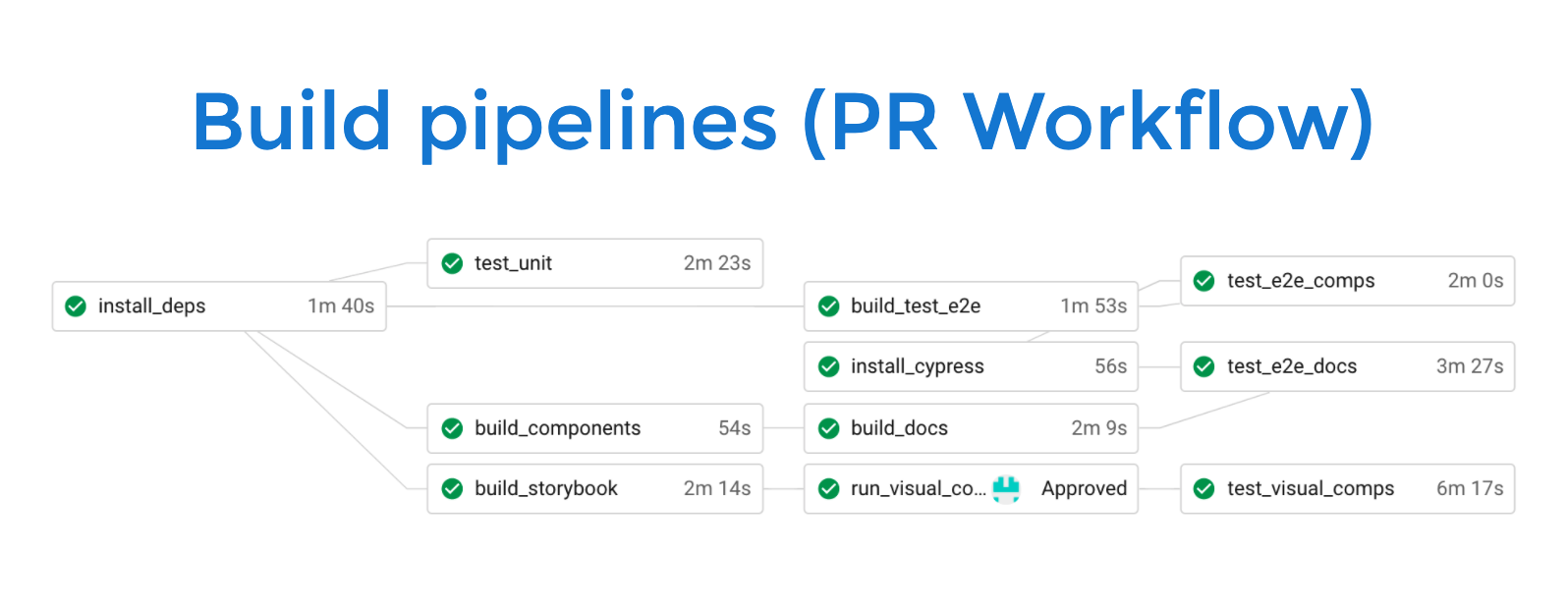

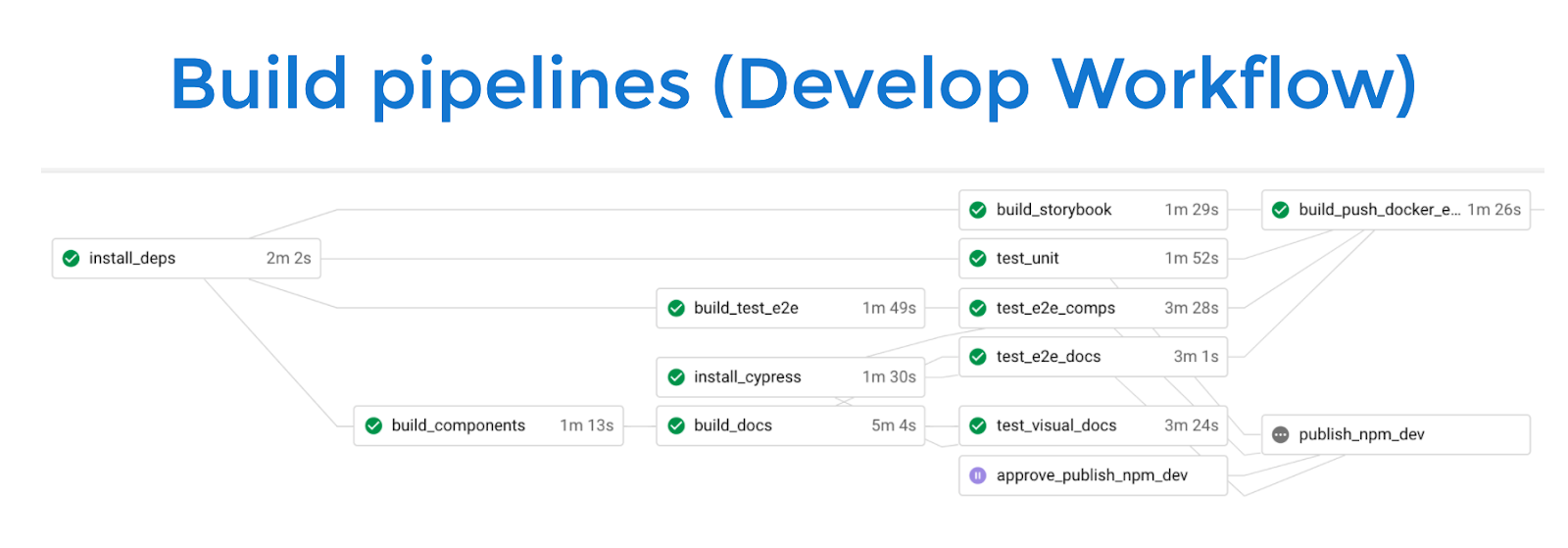

Next, Marie showed the workflow for a pull request. Each pull request at the component level required a visual validation of the component before merging. She explained how Applitools could maintain separate baselines for each branch and manage checkpoints independently. Then, she showed the full develop workflow build pipeline.

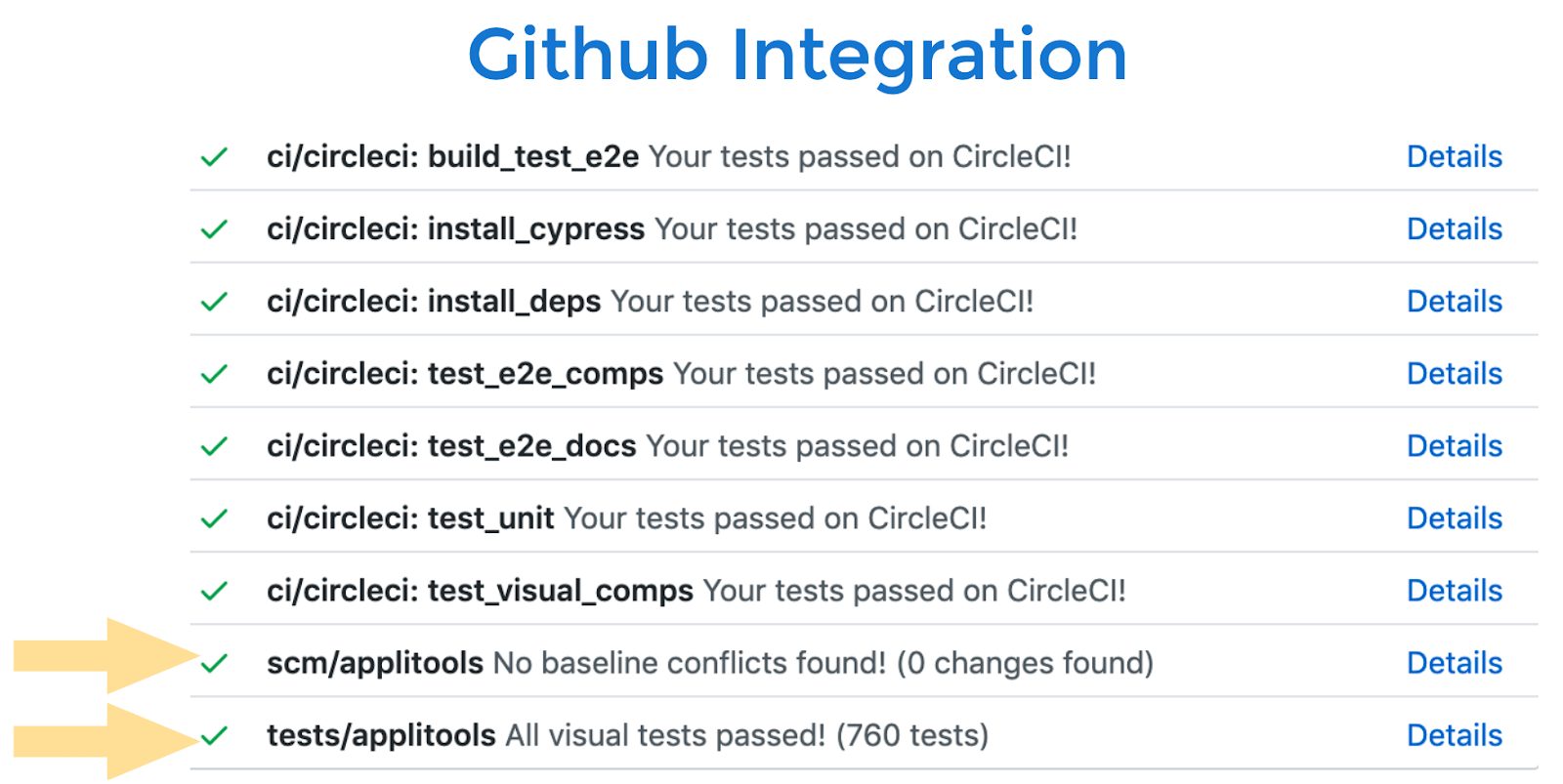

Finally, she showed how the GitHub integration tied visual testing into the overall CircleCI build. She also showed how the build process linked to Slack, so that the team could be notified if the build or testing encountered problems. The build, including all the tests, needed to complete within 10 minutes.

Overall Feedback

Marie provided her team’s general feedback about using Applitools. They concluded that they required Applitools for visual validation of the component-level and site-level tests. Developers appreciated how easily they could use Applitools with Cypress, and how they could run 60 component tests in under 5 minutes across a range of browsers. The design team also uses Applitools to validate the design, and they got up to speed quickly on the visual elements.

As users, they did have feedback for improvement to share with the Applitools product team. One of the most interesting came from the design team, who wondered if they could use UI design tools (Sketch, Figma, Abstract, etc.) to seed the initial baseline for an application.

Beyond Applitools, the accessibility testing has helped ensure that News UK can deliver visual and audio accessibility for their users.

Conclusion

Marie Drake made a strong case for using a design system whenever there are multiple design and development groups working independently on a common web application. The design system eliminates redundancy and helps speed the rate of change whenever groups want to roll out application enhancements.

She also argued for building testing into every phase of the design system, from component-level unit, functional, visual, and accessibility tests all the way to page-level tests of the same. Citing testing speed, testing accuracy, ease of test maintenance, and cross-browser coverage, Marie recommended Applitools as the visual test solution for the News UK design system.
