As a kid in the 70s, I loved The Six Million Dollar Man. Many of us know the story: Colonel Steve Austin, astronaut, has a catastrophic crash and is rebuilt as part man, part machine. The world’s first bionic man. As the show’s intro stated, he was rebuilt “better than he was before, better, stronger, faster”. Cue very cool 70s intro music.

Public service announcement: faster isn’t always better. Sometimes, faster is more problematic. Sorry, Steve; my inner 7-year-old is very, very sorry.

Allow me to explain. When implementing automation, speed is often top of mind; the typical refrain is something akin to “automation is going to help us go faster, in part, because computers are faster than humans”. This is generally true. Computers can calculate values, send input to text fields, click buttons, and build payloads, all faster than we humans can. So, yes, test scripts often do perform many steps faster than a human performing those same steps. As an aside, sometimes computers are not faster, but that’s another show.

It’s important to note that an application’s GUI is designed to be used by human users, not by computers executing automated scripts, testability and automatability notwithstanding. It’s also important to note that humans interact with GUIs much differently than automated scripts do. Humans “notice things” and generally perform steps at a slower rate than a computer; conversely, computers can’t notice things, so they have to be told how to perform steps with very specific instructions, but they generally perform steps at a higher rate than a human. This difference in behavior can introduce challenges to creating automated scripts for testing because those scripts must explicitly wait for events that a human user doesn’t have to consider simply because the human processes events differently than a script. Again, humans notice things.

Fortunately, we have some solutions to handle many of these cases when automating. As I described in this previous post, our primary and generally preferred construct for handling waiting is the polling wait; the previous post provides additional information on polling waits. And that’s the way we do things, right? Polling waits all around! I’m sure it’s the Nth automation commandment or something: thou shalt not use a hard wait! I’ve lived this for many years, but I’ve come to believe that I’ve over-rotated on avoiding hard waits.
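For readers who haven’t seen one, here is a minimal sketch of what a polling wait does. It is not taken from any particular tool; the function name `poll_until` and its default timeout and interval are assumptions chosen for illustration.

```python
import time


def poll_until(condition, timeout=10.0, interval=0.25):
    """Hypothetical polling wait: re-check `condition` until it is truthy.

    Returns the condition's value as soon as it is truthy, so the script
    never waits longer than it has to. Raises TimeoutError if the condition
    is still falsy once `timeout` seconds have elapsed.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        # Pause briefly between checks instead of spinning the CPU.
        time.sleep(interval)
```

A hard wait, by contrast, is just `time.sleep(n)`: it always costs the full `n` seconds, whether or not the application was ready sooner.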

Recently, I was on a project where I was rehabilitating some existing test scripts. The previous authors weren’t used to working with dynamic web pages and the scripts were suffering from intermittent failures because the timing had changed, and the scripts weren’t waiting for elements to disappear before proceeding to subsequent steps. I spent a lot of time adding polling waits and changing the conditions for some existing polling waits. I was pretty successful in increasing the stability of the automated smoke test scripts, but I was really stuck in a few places. I tried waiting on various existence, visibility, and display attributes, all to no avail.

What I noticed was that it seemed to take these problematic elements some additional time to actually be ready to interact with, even though these elements were displayed and enabled. I would have understood all of this and chalked it up to me not using an appropriate check of “clickability”, but the call to the Click method did not cause an error; as far as Selenium was concerned, the click was successful, but the expected response of the click never occurred.

I spent even more time trying to tweak the polling waits to no avail. During my investigation I inserted several hard waits to determine if timing played a factor; this is a common way to debug timing issues. Lo and behold, the hard wait allowed the click to consistently behave as expected.

So, what to do? On one hand, if I am going to use a hard wait, why bother with the code looking for “clickability”? On the other hand, the hard wait made me unhappy with myself, but it felt like spending more time hunting for a solution that was not based on a hard wait might not be valuable. The solution? I decided to be responsible. I would leave the check for “clickability” in place so that we could exit that check as soon as Selenium deemed the element to be clickable. I would then supplement that check with a short hard wait. Based on my experiments, a 250-millisecond pause was long enough to cover the gap between Selenium deeming the element clickable and that specific element actually being clickable.
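The combination described above can be sketched roughly as follows. This is an illustration, not the code from the project: the helper name `click_after_settle` and its parameters are hypothetical, and the two callables stand in for whatever your tool provides.

```python
import time

# The 250 ms value comes from the experiments described above; treat it as
# a starting point to tune for your own application, not a universal constant.
SETTLE_PAUSE_SECONDS = 0.25


def click_after_settle(wait_until_clickable, click,
                       settle_pause=SETTLE_PAUSE_SECONDS, sleep=time.sleep):
    """Hypothetical helper: polling wait first, then a short hard wait, then click.

    wait_until_clickable: callable that blocks until the tool reports the
        element as clickable, then returns the element.
    click: callable that performs the click on that element.
    sleep: injectable so the pause can be replaced in unit tests.
    """
    # Step 1: the polling wait exits as soon as the tool deems the element clickable.
    element = wait_until_clickable()
    # Step 2: a short hard wait covers the gap until the element really responds.
    sleep(settle_pause)
    # Step 3: only now apply the click.
    click(element)
    return element
```

With Selenium’s Python bindings, `wait_until_clickable` could be something like `lambda: WebDriverWait(driver, 10).until(EC.element_to_be_clickable(locator))` and `click` could be `lambda element: element.click()`, where `driver` and `locator` are whatever your script already uses.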

How did I get past my distaste for the hard wait? Well, that goes back to who the intended user of this GUI was going to be, i.e., a human. If the test script is failing, consistently or inconsistently, because it’s doing things that a human physically can’t do, I don’t think it’s irresponsible to make the script behave a bit more like a human. In the case of this specific situation, making the script behave more like a human means slowing down execution by adding a hard wait before performing the click.

Logically, a question that could follow from this approach is this: if a short pause before a click in one context is OK, what’s the harm in making all clicks have that short pause? Even better, why not make all actions have a pause between when a tool indicates that the element is ready to receive a stimulus and applying that stimulus? The simple answer is scale.

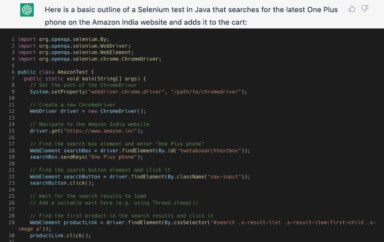

Take, for example, a hypothetical but typical login scenario. An automated test script for logging in might look like the following:

    Browser.LoginLink.Click()
    Browser.EmailAddress = "foo@bar.com"
    Browser.Password = "mypassword"
    Browser.LoginButton.Click()
    Assert(Browser.LogOutButton.Exists)

If we were to add a 250-millisecond pause to each action in the above pseudocode, we would add 1.25 seconds to each login; there are 5 actions in the above code: 2 clicks, 2 typing actions, and 1 existence check. That doesn’t sound like such a big deal, but let’s assume we have 1000 test scripts, each of which logs in at least once. That adds over 20 minutes to the automation run when running sequentially. But we run in parallel, you say? Awesome! Let’s suppose we are running 20 test scripts in parallel. That’s still about 1 additional minute of execution duration, and only for logging in! When we think about how many subsequent actions we have in each test script, we can quickly see that the added duration can be intolerable for most organizations. It generally makes more sense to add a hard wait only in those cases where it is required.
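The arithmetic is easy to check. The short script below uses only the numbers from the example above (the variable names are mine) and assumes exactly one login per script and an even split of scripts across the parallel workers.

```python
PAUSE_SECONDS = 0.25      # hard wait added to each action
ACTIONS_PER_LOGIN = 5     # 2 clicks, 2 typing actions, 1 existence check
SCRIPT_COUNT = 1000       # each script is assumed to log in exactly once
PARALLEL_WORKERS = 20     # scripts are assumed to be spread evenly

added_per_login = PAUSE_SECONDS * ACTIONS_PER_LOGIN       # 1.25 seconds
sequential_total = added_per_login * SCRIPT_COUNT         # 1250 seconds
parallel_total = sequential_total / PARALLEL_WORKERS      # 62.5 seconds

print(f"Added per login: {added_per_login} s")
print(f"Added to a sequential run: {sequential_total / 60:.1f} min")
print(f"Added to a 20-way parallel run: {parallel_total} s")
```

Swap in your own action counts and suite size to see how quickly a blanket pause adds up.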

It’s important to note that this is not carte blanche to use hard waits when polling waits would be more appropriate; the traditional caveats apply when using hard waits. As shown above, the overuse of hard waits can increase test script execution duration; whether that’s a problem depends on your context. Additionally, even in the specific scenarios described above, we still need to experiment with the wait’s duration. The advantage of using hard waits in this relatively narrow way is that we are typically changing the durations by hundreds of milliseconds, not tens of seconds, and only in select situations. If we do find our hard waits creeping up to several seconds or even tens of seconds, we should reevaluate whether a polling wait is more appropriate for that specific case.
