When you run your automated tests, you have an expectation: if there is no bug, the tests will pass; otherwise, they will signal the bug by failing. However, if you sit and watch the test execution, you may be surprised. The tests pass, yet they fail to report a bug. You can see the bug clearly, but the tests do not pick up on it. Here are 4 situations in which this can happen, and what you can do about them.

1. When you don’t try the try or catch the catch

The most frequent situation in which the automation passes without picking up on a bug involves a try/catch block. Here is how it happens: the test (and the tester who writes it) expects an Exception to be thrown, and forgets to handle the case when the Exception is not thrown.

Let’s look at an example: say the tester expects a NoSuchElementException to be thrown when certain Selenium actions are performed, and certain code needs to execute when that happens. The tester wraps the Selenium actions in a try block and puts the code to execute in a catch block for NoSuchElementException.
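A minimal, self-contained Java sketch of this pattern follows. It is an illustration, not the author’s exact code: java.util.NoSuchElementException stands in for Selenium’s org.openqa.selenium.NoSuchElementException so no Selenium dependency is needed, and findElement is a hypothetical helper that simulates the lookup.

```java
import java.util.NoSuchElementException;

public class MissingFailExample {

    // Hypothetical stand-in for a Selenium lookup such as driver.findElement(...).
    // java.util.NoSuchElementException is used so the sketch needs no Selenium
    // dependency; a real test would see Selenium's own exception type.
    static String findElement(boolean elementPresent) {
        if (!elementPresent) {
            throw new NoSuchElementException("Element not found");
        }
        return "element";
    }

    // Flawed pattern: only the "exception is thrown" case is handled.
    // Returns true if the test finishes without reporting a failure.
    static boolean flawedTest(boolean elementPresent) {
        try {
            findElement(elementPresent);
            // ... further Selenium actions ...
        } catch (NoSuchElementException e) {
            // Code that must run when the expected exception is thrown.
            System.out.println("Expected exception caught: " + e.getMessage());
        }
        // Reached whether or not the exception was thrown,
        // so nothing here ever reports a failure.
        return true;
    }

    public static void main(String[] args) {
        System.out.println(flawedTest(false)); // exception thrown: prints true
        System.out.println(flawedTest(true));  // no exception (the bug): still prints true
    }
}
```

Both calls report success, which is exactly the false pass described next.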

This code works perfectly when the expected exception is thrown. But what happens when it is not? All the code in the try branch executes, and then execution simply carries on with the code after the try/catch block, if any exists, so the test passes. If the absence of the exception signals a bug, an additional line needs to be added at the end of the try branch: a call to fail().
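The sketch below adds that fail line to the hypothetical example. The local fail method is an assumption standing in for JUnit’s or TestNG’s fail(), which throw an AssertionError (or a subclass of it). Because AssertionError is an Error rather than a NoSuchElementException, the catch branch does not swallow it.

```java
import java.util.NoSuchElementException;

public class FailInTryExample {

    // Hypothetical lookup helper; java.util.NoSuchElementException
    // stands in for Selenium's exception of the same name.
    static String findElement(boolean elementPresent) {
        if (!elementPresent) {
            throw new NoSuchElementException("Element not found");
        }
        return "element";
    }

    // Minimal stand-in for a test framework's fail(): throws AssertionError.
    static void fail(String message) {
        throw new AssertionError(message);
    }

    static void fixedTest(boolean elementPresent) {
        try {
            findElement(elementPresent);
            // Only reached if no exception was thrown: report it.
            fail("Expected NoSuchElementException was not thrown");
        } catch (NoSuchElementException e) {
            // The AssertionError from fail() is not caught here,
            // so it propagates and fails the test.
            System.out.println("Expected exception caught: " + e.getMessage());
        }
    }

    // Returns true if fixedTest completes without an assertion failure.
    static boolean passes(boolean elementPresent) {
        try {
            fixedTest(elementPresent);
            return true;
        } catch (AssertionError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(passes(false)); // exception thrown as expected: true
        System.out.println(passes(true));  // exception missing: false
    }
}
```

If you use JUnit 5, Assertions.assertThrows(NoSuchElementException.class, () -> ...) expresses the same intent without a hand-written try/catch.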

This way, if all the code in the try block executes successfully up to the fail line, the catch block does not execute and the test fails. The fail line ensures the test signals that the behavior is not the expected one: the exception was not thrown.

Be mindful when using try/catch blocks. Always think about both outcomes of the condition you are evaluating: what happens when it occurs, and what happens when it doesn’t.

2. The Exception that hides all exceptions

Another situation in which a try/catch block can make a test falsely pass is when a ‘too general’ exception is caught, such as ‘Exception’ itself. For example, a try block may contain several Selenium interactions, all guarded by a single catch (Exception e) branch.
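A hypothetical Java sketch of such a block follows. The three step methods are invented stand-ins for Selenium calls, and IllegalStateException and UnsupportedOperationException are assumed substitutes for whatever unexpected exceptions a real interaction might throw. The method returns how many steps completed, so the partial execution is visible.

```java
import java.util.NoSuchElementException;

public class CatchAllExample {

    // Hypothetical steps standing in for Selenium calls;
    // each one can fail with a different exception type.
    static void findOptionalElement(boolean present) {
        if (!present) throw new NoSuchElementException("Optional element not found");
    }

    static void clickElement(boolean clickable) {
        if (!clickable) throw new IllegalStateException("Element not clickable");
    }

    static void typeText(boolean editable) {
        if (!editable) throw new UnsupportedOperationException("Element not editable");
    }

    // Returns how many of the three steps completed. The catch-all branch
    // swallows every exception, so the caller never learns why it stopped.
    static int run(boolean present, boolean clickable, boolean editable) {
        int stepsCompleted = 0;
        try {
            findOptionalElement(present);
            stepsCompleted++;
            clickElement(clickable);
            stepsCompleted++;
            typeText(editable);
            stepsCompleted++;
        } catch (Exception e) {
            // Intended only for NoSuchElementException, but catches everything.
        }
        return stepsCompleted;
    }

    public static void main(String[] args) {
        System.out.println(run(false, true, true)); // element absent: 0 steps, as intended
        System.out.println(run(true, false, true)); // bug: stops after 1 step, nothing reported
        System.out.println(run(true, true, true));  // happy path: 3 steps
    }
}
```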

Here, the try block contains a lot of code. Several of these lines can throw exceptions, and each can throw a different one. The tester does not realize which exceptions can be thrown here, and only expects the NoSuchElementException.

The scenario the tester had in mind is this: if the element being looked up is present, the subsequent lines in the try block interact with it in some way; if it is not there, the NoSuchElementException is caught by the catch block, and the test simply continues. The element is optional; however, if it is present, further actions are required.

What the tester does not realize is that even when the NoSuchElementException is not thrown, any of the subsequent lines could throw a different exception. This leads to an incomplete execution of the scenario: perhaps the first two lines of the try block run, but not the third and fourth. What the tester actually wanted was ‘all or nothing’ execution of the code in the try block, but that is not what happens.

The biggest issue with this try/catch block is that the catch branch hides possible bugs by catching exceptions the tester did not even expect. These exceptions could signal a bug, but they never reach the test runner, because the catch branch swallows them without the tester realizing it.

In this case, it is better to catch only the specific exception the tester expects. Any other exception should propagate, because it signals an abnormal test execution. Ideally, there should also be as little code as possible inside a try/catch block, to avoid unexpected behavior.
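Applying both suggestions to the same hypothetical steps gives the sketch below: only the lookup expected to fail sits inside the try block, and only NoSuchElementException is caught. The step methods and exception types are the same assumptions as in the previous sketch; throwsUnexpected is an added helper that just makes the propagated exception easy to observe.

```java
import java.util.NoSuchElementException;

public class NarrowCatchExample {

    // Hypothetical steps standing in for Selenium calls.
    static void findOptionalElement(boolean present) {
        if (!present) throw new NoSuchElementException("Optional element not found");
    }

    static void clickElement(boolean clickable) {
        if (!clickable) throw new IllegalStateException("Element not clickable");
    }

    static void typeText(boolean editable) {
        if (!editable) throw new UnsupportedOperationException("Element not editable");
    }

    // Returns 0 if the optional element is absent, 3 if all steps ran.
    static int run(boolean present, boolean clickable, boolean editable) {
        try {
            // Only the call that is expected to fail is guarded.
            findOptionalElement(present);
        } catch (NoSuchElementException e) {
            return 0; // Optional element absent: skip the follow-up actions.
        }
        // Any exception thrown from here on propagates and fails the test.
        clickElement(clickable);
        typeText(editable);
        return 3;
    }

    // True if run(...) let an unexpected exception escape.
    static boolean throwsUnexpected(boolean present, boolean clickable, boolean editable) {
        try {
            run(present, clickable, editable);
            return false;
        } catch (IllegalStateException | UnsupportedOperationException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(run(false, true, true));              // optional element absent
        System.out.println(run(true, true, true));               // all steps ran
        System.out.println(throwsUnexpected(true, false, true)); // click failure is reported
    }
}
```

The result is the ‘all or nothing’ behavior the tester wanted: either the optional element is absent and nothing else runs, or every follow-up step runs and any failure surfaces.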

3. If then else if then else if…then…

In some cases, tests contain very complex if-else blocks: ifs have other ifs inside them, else blocks contain other ifs, and so on. Such nested blocks make it very difficult to track which conditions you are actually handling. A simple if block, by contrast, usually has both an if branch and an else branch.
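A generic Java sketch of such a block follows; the action strings are placeholders, and the method records which branch ran so both outcomes are visible.

```java
import java.util.ArrayList;
import java.util.List;

public class IfElseExample {

    // Records which branch ran, so both outcomes of the condition are visible.
    static List<String> evaluate(boolean conditionFulfilled) {
        List<String> actions = new ArrayList<>();
        if (conditionFulfilled) {
            actions.add("actions for the fulfilled condition");
        } else {
            actions.add("actions for the unfulfilled condition");
        }
        return actions;
    }

    public static void main(String[] args) {
        System.out.println(evaluate(true));
        System.out.println(evaluate(false));
    }
}
```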

In this case, you evaluate a condition. If it is fulfilled, you perform certain actions; if not, you perform other actions. This is good. Unfortunately, many tests leave out the else branch altogether.
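The same placeholder sketch without its else branch looks like this. When the condition is not fulfilled, the method does nothing and reports nothing.

```java
import java.util.ArrayList;
import java.util.List;

public class MissingElseExample {

    static List<String> evaluate(boolean conditionFulfilled) {
        List<String> actions = new ArrayList<>();
        if (conditionFulfilled) {
            actions.add("actions for the fulfilled condition");
        }
        // No else branch: an unfulfilled condition passes silently.
        return actions;
    }

    public static void main(String[] args) {
        System.out.println(evaluate(true));
        System.out.println(evaluate(false)); // empty list, no failure
    }
}
```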

In that case, actions are performed only when the condition is fulfilled. What about when it is not fulfilled? Does that signal a bug?

Here is an example: if a certain WebElement on the page has a certain text, click on it. What the tester actually wants is to interact with the WebElement only when its text is a particular one. The tester expects the WebElement to be present with the expected text, and does not even consider what happens when it is not. If the bug is that the WebElement shows a different text, this test will falsely pass: the condition is checked (is the text on the WebElement the expected one?), and if it is not, the test just moves on without executing any code, since there is no else branch. When the text is correct, the test passes as well. So no matter what text the WebElement has, the test passes.

This is a clear case where the else branch should be defined, and should signal that a bug is present. If the WebElement does not have the expected text, its purpose is probably different from the expected one, or the text may simply contain a typo. Either way, the expected condition is not fulfilled, and the tester needs to be notified.
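The Java sketch below contrasts the two versions of the WebElement check. FakeElement is a hypothetical stand-in for Selenium’s WebElement (the real interface does provide getText() and click()), the "Submit" and "Sumbit" texts are invented to illustrate a typo, and throwing AssertionError plays the role of fail() in the else branch.

```java
public class TextCheckExample {

    // Hypothetical stand-in for Selenium's WebElement.
    static class FakeElement {
        private final String text;
        private boolean clicked;

        FakeElement(String text) { this.text = text; }
        String getText() { return text; }
        void click() { clicked = true; }
        boolean wasClicked() { return clicked; }
    }

    // Without an else branch: a wrong text silently skips the click.
    static void clickIfTextMatchesNoElse(FakeElement element, String expectedText) {
        if (element.getText().equals(expectedText)) {
            element.click();
        }
    }

    // With an else branch that fails, as fail() would.
    static void clickIfTextMatches(FakeElement element, String expectedText) {
        if (element.getText().equals(expectedText)) {
            element.click();
        } else {
            throw new AssertionError("Expected text '" + expectedText
                    + "' but found '" + element.getText() + "'");
        }
    }

    // True if the check with the else branch completes without failing.
    static boolean passes(FakeElement element, String expectedText) {
        try {
            clickIfTextMatches(element, expectedText);
            return true;
        } catch (AssertionError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        FakeElement typo = new FakeElement("Sumbit");
        clickIfTextMatchesNoElse(typo, "Submit");
        System.out.println("No else, clicked: " + typo.wasClicked());              // nothing failed
        System.out.println("With else, passes: " + passes(new FakeElement("Sumbit"), "Submit"));
    }
}
```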

As I mentioned, this is a simple if-else block, which can hide a bug when not used correctly. Now think about a situation where you have many nested if-else blocks. How can you make sure you have considered all the scenarios? Did you treat all the ifs correctly and create their corresponding else branches? Can you even properly understand what the test is doing when you look at these blocks?

It is better to simplify things so you can manage them correctly. Therefore, try not to use too many nested if-else blocks. Instead, you may be able to replace them with a different construct, such as a switch, or analyse them and, where possible, split them into independent blocks.
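As an illustration, the Java sketch below rewrites a small nested if-else chain as a switch on a String. The banner texts and follow-up actions are invented for the example. The switch’s default branch plays the role of the missing else: any text that is not explicitly handled fails instead of passing silently.

```java
public class SwitchInsteadOfNestingExample {

    // Nested version: every level has to be checked by eye for a missing else.
    static String nextActionNested(String bannerText) {
        if (bannerText.equals("Welcome")) {
            return "open dashboard";
        } else {
            if (bannerText.equals("Account suspended")) {
                return "contact support";
            } else {
                if (bannerText.equals("Password expired")) {
                    return "reset password";
                }
            }
        }
        return "do nothing"; // Unexpected texts silently end up here.
    }

    // Flattened version: one switch, with a default branch that fails
    // for any text that is not explicitly handled.
    static String nextAction(String bannerText) {
        switch (bannerText) {
            case "Welcome":
                return "open dashboard";
            case "Account suspended":
                return "contact support";
            case "Password expired":
                return "reset password";
            default:
                throw new AssertionError("Unexpected banner text: " + bannerText);
        }
    }

    // True if nextAction handles the text without failing.
    static boolean passes(String bannerText) {
        try {
            nextAction(bannerText);
            return true;
        } catch (AssertionError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(nextActionNested("Welcom")); // typo slips through
        System.out.println(passes("Welcom"));           // typo is reported
    }
}
```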

4. When the test simply does not check for that particular bug

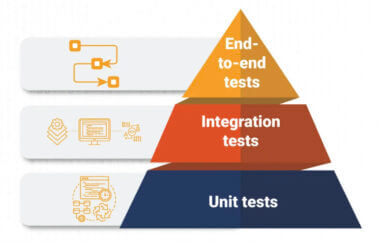

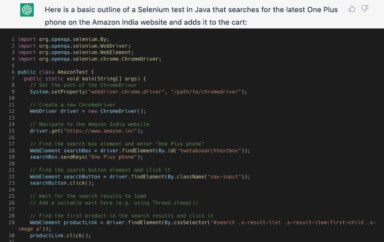

This sounds rather obvious, and it really is. Functional automated tests perform specific actions in specific areas and check for specific responses from the system. Some bugs you are not specifically testing for can still be caught by your automated tests, but others cannot.

For example, if you are using Selenium to test a certain part of a page, you might hit a bug that is not functionally part of what you are testing, but prevents the functionality under test from running correctly. Maybe an unexpected popup is displayed on top of the button you are trying to click, making the button unclickable. In this case, the test will fail and signal that it cannot interact with the element it needs.

In another situation, if there is an additional button on the page that should not be there, your test will not pick it up. The test is not looking for the presence or absence of this button; it is only interested in the particular button it needs to interact with. As written, this test cannot detect changes in the page layout.

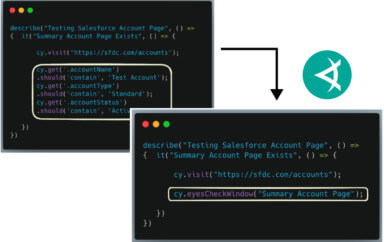

For situations where changes in the page layout represent a bug, you should use Applitools for visual testing, to make sure the test fails when the layout is incorrect. By combining the two (your functional test code and Applitools), you get a more powerful test: it detects functional bugs in the parts of the page you are testing, and visual bugs across the entire page.

Bonus

Well, this is not exactly a situation where your tests pass; you just think they do. Remember that Jenkins job you created that only sends emails on failure? The absence of a failure email might signal a successful test run. Or, once in a while, it might mean the job did not run at all: either the Jenkins instance was down, or the job was not scheduled properly. Since the tests have not run for a while, a bug could easily be present without anyone realizing it. Check out the episode of my comic that illustrates what my fictitious software development team decided to do to fix this.