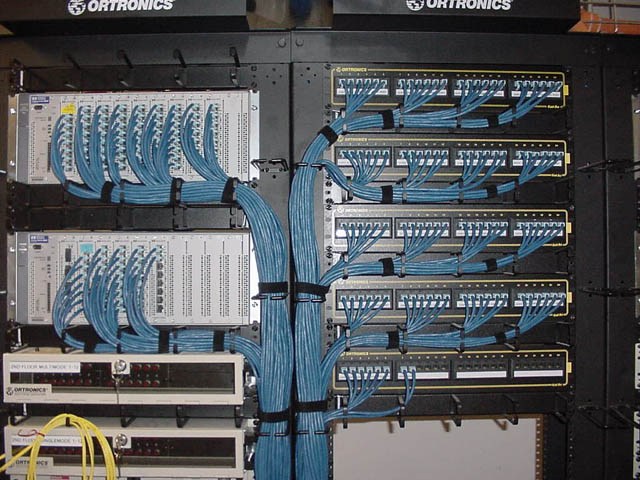

Test code can get as messy as a network closet.

Your test code is a mess. You’re not quite sure where anything is anymore. The fragility of it is causing your builds to fail. You’re hesitant to make any changes for fear of breaking something else. The bottom line is that your tests do not spark joy, as organizing guru Marie Kondo would say.

What’s worse, you’re not really sure how you got to this point. In the beginning, everything was fine. You even modeled your tests after the ones demonstrated in the online tutorials from your favorite test automation gurus. You were so proud to see these initial tests running successfully.

But you didn’t realize your test code was built on a rocky foundation. Those tutorials were meant to be a “getting started” guide, devoid of the architectural complexity that, to be honest, you weren’t quite ready for just yet.

They were never meant to be a template for all of your subsequent tests because, as is, that code doesn’t scale: it’s neither extensible nor maintainable.

But how could you know this? Unfortunately, you couldn’t, and now your tests are littered with code smells. A code smell is an implementation that violates fundamental design principles in a way that may slow down development and increase the risk of future bugs.

The code may very well work, but the way that it’s designed can lead to more problems than it solves.

Don’t worry. It’s time for some spring cleaning to tidy up your test code. Here are seven stinky code smells that may be lying around in your UI tests, and cleaning suggestions to get rid of them.

Photo by Ashim D’Silva on Unsplash

1. Long class

To properly execute your automated test, your code must open your application in a browser, perform the necessary actions on the application, and then verify the resulting state of the application.

Your test needs all of these, but they are different responsibilities, and combining them within a single class is a code smell. It makes the class difficult to grasp as a whole, which can lead to redundancy and maintenance challenges.

The formula for cleaning this smell is to separate these concerns. Moving each responsibility into its own class makes the code easier to find and maintain, and makes redundancy easier to spot.
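Here is a minimal sketch of that split in Python. The article names no language, so Python is an assumption, and `FakeBrowser`, `LoginPage`, and `test_valid_login` are hypothetical names; `FakeBrowser` stands in for a real WebDriver session so the example runs without a browser. Each class or function owns exactly one of the three responsibilities.

```python
class FakeBrowser:
    """Opens the application and drives the browser (hypothetical stand-in)."""

    def __init__(self):
        self.url = None
        self.fields = {}
        self.page_title = ""

    def open(self, url):
        self.url = url
        self.page_title = "Login"

    def type(self, field, text):
        self.fields[field] = text

    def click(self, button):
        # Simulated application: the correct password leads to the dashboard.
        if button == "login" and self.fields.get("password") == "secret":
            self.page_title = "Dashboard"


class LoginPage:
    """Performs actions on the login page; knows nothing about pass/fail."""

    def __init__(self, browser):
        self._browser = browser

    def log_in(self, user, password):
        self._browser.type("username", user)
        self._browser.type("password", password)
        self._browser.click("login")

    def title(self):
        return self._browser.page_title


def test_valid_login():
    """Verifies the resulting state; the only place an assertion lives."""
    browser = FakeBrowser()
    browser.open("https://example.test/login")
    page = LoginPage(browser)
    page.log_in("ada", "secret")
    assert page.title() == "Dashboard"
```

In a real suite, `FakeBrowser` would be replaced by a driver wrapper, but the division of labor stays the same: the test reads as a short script of intent, and page details live in one place.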

2. Long method

The long-method smell has a similar odor to the long-class smell. It occurs when a method or function has too many responsibilities. The same symptoms (poor readability, susceptibility to redundancy, and maintenance difficulty) all surface with this smell.

Often a method doesn’t start out too long; it grows over time as it takes on additional responsibilities. This becomes a problem when a test calls the method to do one thing but ends up executing several other things as well.

The formula for cleaning this smell is the same as with the long-class smell: Separate the concerns, but this time into individual methods that have a single focus.
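As an illustration, suppose a hypothetical `CartPage` once had a single `checkout` method that added an item, entered shipping details, and paid. The sketch below splits it into single-focus methods and keeps `checkout` as a thin composition of them. The class records actions in a list instead of driving a browser, an assumption made only so the example is runnable.

```python
class CartPage:
    """Hypothetical page object; records actions instead of driving a browser."""

    def __init__(self):
        self.actions = []

    def add_item(self, sku):
        """Single focus: put one item in the cart."""
        self.actions.append(f"add {sku}")

    def enter_shipping(self, address):
        """Single focus: fill in the shipping address."""
        self.actions.append(f"ship to {address}")

    def pay(self, card):
        """Single focus: submit payment."""
        self.actions.append(f"pay with {card}")

    def checkout(self, sku, address, card):
        """Full flow, composed from the focused methods above."""
        self.add_item(sku)
        self.enter_shipping(address)
        self.pay(card)
```

A test that only needs an item in the cart can now call `add_item` without also triggering the shipping and payment steps.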

3. Duplicate code

In test automation, it’s especially easy to end up writing the same code over and over. Steps such as logging in and navigating through common areas of the application are naturally part of most scenarios. However, repeating the same logic in multiple places is a code smell.

If the application changes within this flow, you’ll have to hunt down every place where the logic is duplicated in your test code and update each one. This takes too much development time and risks missing a spot, thereby introducing a bug into your test code.

To remove this code smell, extract common code into its own methods. That way the code exists in only one place, can be easily updated when needed, and can simply be called by any method that requires it.
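A minimal sketch, assuming a hypothetical `FakeSession` that logs actions in place of a browser: the login flow is written once in `log_in`, and every test that needs an authenticated user calls it rather than repeating the steps.

```python
class FakeSession:
    """Hypothetical stand-in for a browser session; logs every action."""

    def __init__(self):
        self.log = []

    def go_to(self, path):
        self.log.append(("go_to", path))

    def fill(self, field, value):
        self.log.append(("fill", field, value))

    def click(self, element):
        self.log.append(("click", element))


def log_in(session, user, password):
    """The one and only copy of the login flow; update it here if login changes."""
    session.go_to("/login")
    session.fill("username", user)
    session.fill("password", password)
    session.click("submit")


def test_view_profile():
    session = FakeSession()
    log_in(session, "ada", "secret")  # reused, not copied
    session.go_to("/profile")
    assert session.log[-1] == ("go_to", "/profile")


def test_view_orders():
    session = FakeSession()
    log_in(session, "ada", "secret")  # reused, not copied
    session.go_to("/orders")
    assert session.log[-1] == ("go_to", "/orders")
```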

4. Flaky locator strategy

A key component of making UI automation work is providing your tool with identifiers for the elements you’d like it to find and interact with. Using flaky locators, ones that are not durable, is an awful code smell.

Flaky locators are typically ones provided by a browser in the form of a “Copy XPath” option, or something similar. The resulting locator is usually one with a long ancestry tree and indices (e.g., /html/body/div/div/div/div/div[2]/label/span[2]).

While such a locator may work at the time of capture, it is extremely fragile and susceptible to any change in the page structure, which makes it unsuitable for automation. It’s also a leading cause of false negative test results: tests that fail even though the application is working correctly.

To rid your test code of the flaky-locator smell, use reliable locators such as id or name. When you need more than that, such as a CSS selector or XPath expression, craft the locator with care, anchoring it on stable attributes rather than on the element’s position in the page hierarchy.
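To see why positional locators break, the sketch below uses Python’s standard `xml.etree.ElementTree`, whose limited XPath subset stands in here for the browser’s DOM and XPath engine. The two page snippets are made up: `PAGE_V2` differs from `PAGE_V1` only by a banner inserted at the top. After that change, the positional path silently matches the wrong input, while the attribute-based path keeps finding the email field.

```python
import xml.etree.ElementTree as ET

# Two made-up versions of the same page; v2 adds a banner at the top.
PAGE_V1 = """
<html><body><div>
  <div><label>Name <input name="name"/></label></div>
  <div><label>Email <input id="email"/></label></div>
</div></body></html>
"""

PAGE_V2 = """
<html><body><div>
  <div class="banner">Welcome!</div>
  <div><label>Name <input name="name"/></label></div>
  <div><label>Email <input id="email"/></label></div>
</div></body></html>
"""

# Positional path in the style of "Copy XPath" (relative here, because
# ElementTree does not accept absolute paths on an element).
BRITTLE = "./body/div/div[2]/label/input"

# Attribute-based path anchored on a stable id.
ROBUST = ".//input[@id='email']"


def matched_field(page, path):
    """Return the id or name of the element `path` matches, or None."""
    root = ET.fromstring(page.strip())
    element = root.find(path)
    if element is None:
        return None
    return element.get("id") or element.get("name")
```

With Selenium’s Python bindings, the robust choice corresponds to `driver.find_element(By.ID, "email")` rather than a copied positional XPath.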

5. Indecent exposure

Classes and methods should not expose their internals unless there’s a good reason to do so. In test automation code bases, we explicitly separate our tests from our framework. It’s important to stay true to this by hiding internal framework code from our test layer.

It’s a code smell to expose class fields, such as the web elements used in Page Object classes, or class methods that return web elements. Doing so gives test code access to DOM-specific details that are not its responsibility.

To rid your code of this smell, narrow the scope of your framework code by adjusting the access modifiers. Make all necessary class fields private or protected, and do the same for methods that return web elements.
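Python has no access modifiers, so this sketch swaps in the leading-underscore convention for “private.” `SearchPage`, `_FakeElement`, and `CATALOG` are hypothetical; `_FakeElement` mimics a tiny slice of a web element so the example runs without a browser. Tests interact only through `search_for` and `result_count`, which accept and return plain values instead of elements.

```python
CATALOG = ["apple", "apricot", "banana"]  # hypothetical application data


class _FakeElement:
    """Hypothetical stand-in for a web element (text, clear, send_keys)."""

    def __init__(self, text=""):
        self.text = text
        self.value = ""

    def clear(self):
        self.value = ""

    def send_keys(self, keys):
        self.value += keys


class SearchPage:
    def __init__(self):
        # Leading underscores mark these as internal: tests should not touch them.
        self._search_box = _FakeElement()
        self._result_label = _FakeElement(text="0 results")

    def search_for(self, term):
        """Public, intent-level action; no element leaves this class."""
        self._search_box.clear()
        self._search_box.send_keys(term)
        # Simulated application behavior: count catalog items containing the term.
        matches = sum(term in item for item in CATALOG)
        self._result_label.text = f"{matches} results"

    def result_count(self):
        """Return a plain int parsed from the label, not the element itself."""
        return int(self._result_label.text.split()[0])
```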

6. Inefficient waits

Automated code moves a lot faster than we do as humans, which means that sometimes we have to slow the code down. For example, after clicking a button, the application under test may need time to process the action, whereas the test code is ready to take its next step. To account for this, you’ll need to add pauses, or waits, to your test code.

However, adding a hard-coded wait that pauses the test for x seconds is a code smell. It slows down overall test execution, and different environments may require different wait times. You end up using the longest wait that works in every environment, which makes your tests slower than they need to be in most cases.

To clean up this smell, consider using conditional waits. Many browser automation libraries, such as Selenium WebDriver, provide ways to wait for specific conditions before continuing execution. These waits last only as long as it takes for the condition to be met, up to a configurable timeout.
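Selenium’s Python bindings provide this as `WebDriverWait(driver, timeout).until(condition)`, with ready-made conditions in `selenium.webdriver.support.expected_conditions`. The standard-library sketch below is not Selenium’s implementation; it is a hypothetical `wait_until` helper that shows the idea: poll a condition, return as soon as it holds, and fail with `TimeoutError` only after the timeout.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.5):
    """Poll `condition` until it returns a truthy value, then return that value.

    Raises TimeoutError if the condition is still falsy after `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        # Sleep briefly, but never past the deadline.
        time.sleep(min(poll_interval, remaining))


# Usage: a hypothetical "button" that becomes ready about 0.2 s from now.
if __name__ == "__main__":
    ready_at = time.monotonic() + 0.2
    wait_until(lambda: time.monotonic() >= ready_at, timeout=5, poll_interval=0.05)
```

Because the helper returns as soon as the condition is true, a slow environment simply waits longer, up to the timeout, while a fast one pays almost nothing.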

7. Multiple points of failure

Again, we separate test code from the framework so that each class focuses on its own responsibility and nothing more. Just as the test code should not be concerned with the internal details of manipulating the browser, the framework code should not be concerned with failing a test.

Adding assertions to your framework code is a code smell. It is not this code’s responsibility to determine the fate of a test, and doing so limits the framework code’s reusability across both positive and negative scenarios.

To rid your code of this smell, remove all assertions from your framework code. Allow your test code to query your framework code for state, and then make the judgment call of pass or fail within the test itself.
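A minimal sketch with a hypothetical `LoginForm` page object: it reports what the page shows through `error_message()` and never asserts, so both a negative and a positive test can reuse the same method and make their own pass or fail decision. The form’s behavior is simulated so the example runs without a browser.

```python
class LoginForm:
    """Hypothetical page object: reports state, never asserts."""

    def __init__(self):
        self._error = None  # stands in for an error banner element

    def submit(self, user, password):
        # Simulated application behavior: an empty password shows an error banner.
        self._error = "Password is required" if not password else None

    def error_message(self):
        """Return the banner text, or None if no error is shown."""
        return self._error


def test_rejects_empty_password():
    form = LoginForm()
    form.submit("ada", "")
    assert form.error_message() == "Password is required"


def test_accepts_valid_password():
    form = LoginForm()
    form.submit("ada", "secret")
    assert form.error_message() is None
```

Had `submit` itself asserted that no error appeared, the negative test could not have reused it.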

Clean Is Best.

Freshen up your code

Smelly test code does not spark joy. In fact, it is a huge pain point for development teams. Set aside some time to tidy up your test automation by sniffing out these code smells and getting rid of them once and for all!

The original version of this post first appeared on TechBeacon.
