Agile Software Development was designed to shrink feedback loops along with shrinking time to market. Instead of delaying feedback until a ‘test’ phase, feedback is built into each layer of the product and process.

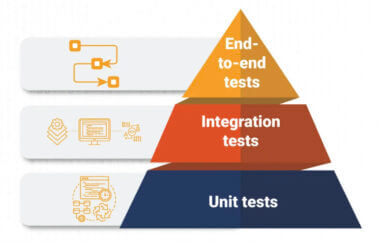

In a perfect universe, developers write unit-level tests in tandem with new feature code, and technical testers write tests at the service layer. Maybe after that, crucial parts of the user interface are automated. Automation in this style is a well-designed safety net, used each time a build is performed.

Sadly, the universe is not perfect.

There is one lingering question. How can we shrink the feedback loop for the user interface to match the speed of code?

Keeping Up with Change

The typical release cycle in an “Agile” team is a compressed waterfall. Business analysts talk with customers to discover and prioritize the next couple of releases. Programmers review the requests and (hopefully) break the individual features into tasks. After writing the code, they hand off the new work to a test group to start the find, fix, retest cycle.

“Agile” in quotes looks a bit like that; the events just happen in two weeks instead of six to eight months.

In this model of development, User Interface testing is usually haphazard. A few bugs might be found, but usually only because we got lucky and stumbled across a button covered up by a new text label, or a text label that isn’t wrapping when the browser is resized, not because we had a plan and intentionally looked for User Interface problems introduced by the last set of changes.

Instead of arriving at the speed of development, User Interface feedback arrives at random. Sometimes we discover these problems on the last day of a release or, worse, in production, and are forced into hard decisions about delaying a release and reorganizing work that is already in progress.

Increasing Velocity

Successful agile teams move fast because they have layers of automation that send out an alert when a new change breaks old functionality. A unit test will probably fail if a basic calculation breaks. If the login API stops working, tests at the service layer will fail. And if an important workflow breaks, an automated user interface test will probably fail. Each layer of tests helps the development team discover problems almost immediately, in small, deliverable pieces of code.

Visual UI testing catches that last layer of UI problems: the ones in which the user interface just “looks wrong.” Without it, a problem could be introduced on day one of a new sprint and caught on day nine or ten, forcing a conversation about delaying the release, not shipping the change, shipping without a fix, or fixing it next sprint. Adding visual automation moves testing of user interface changes into the same fast feedback loop as unit tests and the incremental build. Better, it can be attached to tools many testers are already using, such as WebDriver.

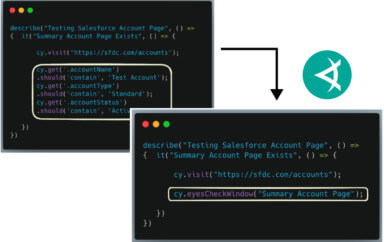

Combining a popular User Interface tool set (WebDriver, for example) with visual testing looks something like this. A picture is taken and stored every time your WebDriver test navigates to a new page. Once the set of tests is complete, you have a set of pictures from whatever portion of the software you are testing with WebDriver. After the build, a small process runs that compares each baseline image with the newly captured image. That comparison might be “pixel perfect”, just layout, just content, or some combination of criteria. Modern tools can use special comparison techniques to simulate how a person might review a webpage, as well as to give consistent and usable results.

Visual UI testing paired with automation not only gives you feedback about user interface changes every time your WebDriver tests are run, it also creates a sort of build documentation. Each saved baseline can be used as a reference for the state of the user interface at a certain point in time.

Now, rather than random blasts of information about the User Interface coming in when testers trip over it, your group is getting news with every build, based on your automated User Interface tests. The next question is: how do we make the process of looking at failures, and deciding what is important and what is OK, fast enough for an agile cadence?

A quick comparison tool that highlights the differences between baseline and the newly captured picture, where you can give a thumbs up or down, can be used in minutes. Ideally, the baseline and new image would be displayed side by side so you can visualize the differences.
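To make “highlights the differences” concrete, here is a small Python sketch that finds the rectangle enclosing every pixel that changed between a baseline and a new capture. It assumes both images have already been decoded into lists of rows of pixel values (for example `(r, g, b)` tuples). The function name and the in-memory format are assumptions made for this example, and a real tool would do something far more forgiving than exact pixel equality.

```python
def diff_bounding_box(baseline, current):
    """Return (left, top, right, bottom) enclosing all differing pixels.

    Coordinates are inclusive. Returns None when the images are identical.
    Both images are lists of rows; pixels can be any comparable values.
    """
    same_shape = len(baseline) == len(current) and all(
        len(row_a) == len(row_b) for row_a, row_b in zip(baseline, current)
    )
    if not same_shape:
        raise ValueError("images must have the same dimensions")

    xs, ys = [], []
    for y, (row_a, row_b) in enumerate(zip(baseline, current)):
        for x, (pixel_a, pixel_b) in enumerate(zip(row_a, row_b)):
            if pixel_a != pixel_b:
                xs.append(x)
                ys.append(y)

    if not xs:
        return None
    return (min(xs), min(ys), max(xs), max(ys))
```

A review tool could draw that rectangle on top of both images in the side-by-side view, so the reviewer's eye goes straight to the region that changed.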

Some tools can group similar differences together for group approval or rejection to make this process faster. For example, if the footer on a website is changed, and every page fails because of that change, that updated section can be approved for all pages at the same time.
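The footer example can be sketched in a few lines of Python. This assumes each failing page has already been reduced to a “diff signature”, a hypothetical string that is identical whenever two pages changed in the same way (for instance, a hash of the changed region). How a real tool decides that two differences are “similar” is not covered here; the names below are illustrative only.

```python
from collections import defaultdict


def group_failures(failures):
    """Group failing pages by diff signature.

    `failures` maps page name -> signature (hypothetical, see above).
    Returns {signature: [page names, sorted]}.
    """
    groups = defaultdict(list)
    for page, signature in sorted(failures.items()):
        groups[signature].append(page)
    return dict(groups)


def approve_group(groups, signature, approved):
    """Mark every page sharing `signature` as approved in one step."""
    approved.update(groups.get(signature, []))
    return approved


failures = {
    "home": "footer-changed",
    "cart": "footer-changed",
    "login": "button-covered",
}
groups = group_failures(failures)
approved = approve_group(groups, "footer-changed", set())
# `approved` now holds "cart" and "home"; "login" still needs its own review.
```

One thumbs up for the footer change clears every page that failed only because of it, leaving the reviewer with the handful of differences that are actually unexpected.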

I have occasionally seen WebDriver scripts pass tests that would not work if a real person were performing them. For example, once I was watching an automated script that was supposed to open a date-picker, select a value and then submit the form.

The date picker was never visibly opened during the test, but the value was still being set. A click was performed to open the picker, but the modal was being covered by something else. The WebDriver test was selecting the date value successfully through the DOM without the date picker being visible on the screen.

This type of bug would be quickly discovered when comparing images captured from visual UI testing. And, it has a chance of being discovered after every build rather than when I happen to watch the test run or stumble across the page myself. Without automation, we often discover visual problems several builds after they were introduced. This time gap can make it more difficult for developers to discover what caused the problem.

Visual UI testing takes something that used to be haphazard at best, and an emergency testing session at worst, and turns it into a regular flow of information. Instead of last-minute discoveries, every build is an opportunity to perform a blink test. Your User Interface doesn’t have to be left behind.


Post written by Justin Rohrman:

Justin has been a professional software tester in various capacities since 2005. In his current role, Justin is a consulting software tester and writer working with Excelon Development. Outside of work, he is currently serving on the Association for Software Testing Board of Directors as President, helping to facilitate and develop projects like BBST, WHOSE, and the annual conference CAST.